Chapter 2 Manipulate and Summarize Dataframes

2.1 Variables in Dataframes

2.1.1 Generate Dataframe

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

2.1.1.1 Simple Dataframe, Name Columns

# 5 by 3 matrix

mt_rnorm_a <- matrix(rnorm(4,mean=0,sd=1), nrow=5, ncol=3)

# Column Names

ar_st_varnames <- c('id','var1','varb','vartheta')

# Combine to tibble, add name col1, col2, etc.

tb_combine <- as_tibble(mt_rnorm_a) %>%

rowid_to_column(var = "id") %>%

rename_all(~c(ar_st_varnames))

# Display

kable(tb_combine) %>% kable_styling_fc()| id | var1 | varb | vartheta |

|---|---|---|---|

| 1 | -1.1655448 | -0.8185157 | 0.6849361 |

| 2 | -0.8185157 | 0.6849361 | -0.3200564 |

| 3 | 0.6849361 | -0.3200564 | -1.1655448 |

| 4 | -0.3200564 | -1.1655448 | -0.8185157 |

| 5 | -1.1655448 | -0.8185157 | 0.6849361 |

2.1.1.2 Dataframe with Row and Column Names and Export

First, we generate an empty matrix. Second, we compute values to fill in matrix cells.

# an NA matrix

it_nrow <- 5

it_ncol <- 3

mt_na <- matrix(NA, nrow=it_nrow, ncol=it_ncol)

# array of nrow values

ar_it_nrow <- seq(1, it_nrow)

ar_it_ncol <- seq(1, it_ncol)

# Generate values in matrix

for (it_row in ar_it_nrow) {

for (it_col in ar_it_ncol) {

print(glue::glue("row={it_row} and col={it_col}"))

mt_na[it_row, it_col] = it_row*it_col + it_row + it_col

}

}## row=1 and col=1

## row=1 and col=2

## row=1 and col=3

## row=2 and col=1

## row=2 and col=2

## row=2 and col=3

## row=3 and col=1

## row=3 and col=2

## row=3 and col=3

## row=4 and col=1

## row=4 and col=2

## row=4 and col=3

## row=5 and col=1

## row=5 and col=2

## row=5 and col=3| 3 | 5 | 7 |

| 5 | 8 | 11 |

| 7 | 11 | 15 |

| 9 | 14 | 19 |

| 11 | 17 | 23 |

Third, we label the rows and the columns. Note that we will include the column names as column names, but the row names will be included as a variable.

# Column Names

ar_st_col_names <- paste0('colval=', ar_it_ncol)

ar_st_row_names <- paste0('rowval=', ar_it_nrow)

# Create tibble, and add in column and row names

tb_row_col_named <- as_tibble(mt_na) %>%

rename_all(~c(ar_st_col_names)) %>%

mutate(row_name = ar_st_row_names) %>%

select(row_name, everything())

# Display

kable(tb_row_col_named) %>% kable_styling_fc()| row_name | colval=1 | colval=2 | colval=3 |

|---|---|---|---|

| rowval=1 | 3 | 5 | 7 |

| rowval=2 | 5 | 8 | 11 |

| rowval=3 | 7 | 11 | 15 |

| rowval=4 | 9 | 14 | 19 |

| rowval=5 | 11 | 17 | 23 |

Finally, we generate a file name for exporting this tibble to a CSV file. We create a file name with a time stamp.

# Create a file name with date stamp

st_datetime <- base::format(Sys.time(), "%Y%m%d-%H%M%S")

# Copying a fixed date to avoid generating multiple testing files

# The date string below is generated by Sys.time()

st_snm_filename <- paste0("tibble_out_test_", st_datetime, '.csv')

# Create a file name with the time stamp.

spn_file_path = file.path(

"C:", "Users", "fan",

"R4Econ", "amto", "tibble", "_file",

st_snm_filename,

fsep = .Platform$file.sep)

# Save to file

write_csv(tb_row_col_named, spn_file_path)2.1.1.3 Generate Tibble given Matrixes and Arrays

Given Arrays and Matrixes, Generate Tibble and Name Variables/Columns

- naming tibble columns

- tibble variable names

- dplyr rename tibble

- dplyr rename tibble all variables

- dplyr rename all columns by index

- dplyr tibble add index column

- see also: SO-51205520

# Base Inputs

ar_col <- c(-1,+1)

mt_rnorm_a <- matrix(rnorm(4,mean=0,sd=1), nrow=2, ncol=2)

mt_rnorm_b <- matrix(rnorm(4,mean=0,sd=1), nrow=2, ncol=4)

# Combine Matrix

mt_combine <- cbind(ar_col, mt_rnorm_a, mt_rnorm_b)

colnames(mt_combine) <- c('ar_col',

paste0('matcolvar_grpa_', seq(1,dim(mt_rnorm_a)[2])),

paste0('matcolvar_grpb_', seq(1,dim(mt_rnorm_b)[2])))

# Variable Names

ar_st_varnames <- c('var_one',

paste0('tibcolvar_ga_', c(1,2)),

paste0('tibcolvar_gb_', c(1,2,3,4)))

# Combine to tibble, add name col1, col2, etc.

tb_combine <- as_tibble(mt_combine) %>% rename_all(~c(ar_st_varnames))

# Add an index column to the dataframe, ID column

tb_combine <- tb_combine %>% rowid_to_column(var = "ID")

# Change all gb variable names

tb_combine <- tb_combine %>%

rename_at(vars(starts_with("tibcolvar_gb_")),

funs(str_replace(., "_gb_", "_gbrenamed_")))

# Tibble back to matrix

mt_tb_combine_back <- data.matrix(tb_combine)

# Display

kable(mt_combine) %>% kable_styling_fc_wide()| ar_col | matcolvar_grpa_1 | matcolvar_grpa_2 | matcolvar_grpb_1 | matcolvar_grpb_2 | matcolvar_grpb_3 | matcolvar_grpb_4 |

|---|---|---|---|---|---|---|

| -1 | -1.3115224 | -0.1294107 | -0.1513960 | -3.2273228 | -0.1513960 | -3.2273228 |

| 1 | -0.5996083 | 0.8867361 | 0.3297912 | -0.7717918 | 0.3297912 | -0.7717918 |

| ID | var_one | tibcolvar_ga_1 | tibcolvar_ga_2 | tibcolvar_gbrenamed_1 | tibcolvar_gbrenamed_2 | tibcolvar_gbrenamed_3 | tibcolvar_gbrenamed_4 |

|---|---|---|---|---|---|---|---|

| 1 | -1 | -1.3115224 | -0.1294107 | -0.1513960 | -3.2273228 | -0.1513960 | -3.2273228 |

| 2 | 1 | -0.5996083 | 0.8867361 | 0.3297912 | -0.7717918 | 0.3297912 | -0.7717918 |

| ID | var_one | tibcolvar_ga_1 | tibcolvar_ga_2 | tibcolvar_gbrenamed_1 | tibcolvar_gbrenamed_2 | tibcolvar_gbrenamed_3 | tibcolvar_gbrenamed_4 |

|---|---|---|---|---|---|---|---|

| 1 | -1 | -1.3115224 | -0.1294107 | -0.1513960 | -3.2273228 | -0.1513960 | -3.2273228 |

| 2 | 1 | -0.5996083 | 0.8867361 | 0.3297912 | -0.7717918 | 0.3297912 | -0.7717918 |

2.1.1.4 Generate a Table from Lists

We run some function, whose outputs are named list, we store the values of the named list as additional rows into a dataframe whose column names are the names from named list.

First, we generate the function that returns named lists.

# Define a function

ffi_list_generator <- function(it_seed=123) {

set.seed(it_seed)

fl_abc <- rnorm(1)

ar_efg <- rnorm(3)

st_word <- sample(LETTERS, 5, replace = TRUE)

ls_return <- list("abc" = fl_abc, "efg" = ar_efg, "opq" = st_word)

return(ls_return)

}

# Run the function

it_seed=123

ls_return <- ffi_list_generator(it_seed)

print(ls_return)## $abc

## [1] -0.5604756

##

## $efg

## [1] -0.23017749 1.55870831 0.07050839

##

## $opq

## [1] "K" "E" "T" "N" "V"Second, we list of lists by running the function above with different starting seeds. We store results in a two-dimensional list.

# Run function once to get length

ls_return_test <- ffi_list_generator(it_seed=123)

it_list_len <- length(ls_return_test)

# list of list frame

it_list_of_list_len <- 5

ls_ls_return <- vector(mode = "list", length = it_list_of_list_len*it_list_len)

dim(ls_ls_return) <- c(it_list_of_list_len, it_list_len)

# Fill list of list

ar_seeds <- seq(123, 123 + it_list_of_list_len - 1)

it_ctr <- 0

for (it_seed in ar_seeds) {

print(it_seed)

it_ctr <- it_ctr + 1

ls_return <- ffi_list_generator(it_seed)

ls_ls_return[it_ctr,] <- ls_return

}## [1] 123

## [1] 124

## [1] 125

## [1] 126

## [1] 127## [,1] [,2] [,3]

## [1,] -0.5604756 numeric,3 character,5

## [2,] -1.385071 numeric,3 character,5

## [3,] 0.933327 numeric,3 character,5

## [4,] 0.366734 numeric,3 character,5

## [5,] -0.5677337 numeric,3 character,5Third, we convert the list to a tibble dataframe. Prior to conversion we add names to the 1st and 2nd dimensions of the list. Then we print the results.

# get names from named list

ar_st_names <- names(ls_return_test)

dimnames(ls_ls_return)[[2]] <- ar_st_names

dimnames(ls_ls_return)[[1]] <- paste0('seed_', ar_seeds)

# Convert to dataframe

tb_ls_ls_return <- as_tibble(ls_ls_return)

# print

kable(tb_ls_ls_return) %>% kable_styling_fc()| abc | efg | opq |

|---|---|---|

| -0.5604756 | -0.23017749, 1.55870831, 0.07050839 | K, E, T, N, V |

| -1.385071 | 0.03832318, -0.76303016, 0.21230614 | J, A, O, T, N |

| 0.933327 | -0.52503178, 1.81443979, 0.08304562 | C, T, M, S, K |

| 0.366734 | 0.3964520, -0.7318437, 0.9462364 | Z, L, J, Y, P |

| -0.5677337 | -0.814760579, -0.493939596, 0.001818846 | Y, C, O, F, U |

Fourth, to export list to csv file, we need to unlist list contents. See also Create a tibble containing list columns

2.1.1.5 Rename Tibble with Numeric Column Names

After reshaping, often could end up with variable names that are all numeric, intgers for example, how to rename these variables to add a common prefix for example.

# Base Inputs

ar_col <- c(-1,+1)

mt_rnorm_c <- matrix(rnorm(4,mean=0,sd=1), nrow=5, ncol=10)

mt_combine <- cbind(ar_col, mt_rnorm_c)

# Variable Names

ar_it_cols_ctr <- seq(1, dim(mt_rnorm_c)[2])

ar_st_varnames <- c('var_one', ar_it_cols_ctr)

# Combine to tibble, add name col1, col2, etc.

tb_combine <- as_tibble(mt_combine) %>% rename_all(~c(ar_st_varnames))

# Add an index column to the dataframe, ID column

tb_combine_ori <- tb_combine %>% rowid_to_column(var = "ID")

# Change all gb variable names

tb_combine <- tb_combine_ori %>%

rename_at(

vars(num_range('',ar_it_cols_ctr)),

funs(paste0("rho", . , 'var'))

)

# Display

kable(tb_combine_ori) %>% kable_styling_fc_wide()| ID | var_one | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | -1 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 |

| 2 | 1 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 |

| 3 | -1 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 |

| 4 | 1 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 |

| 5 | -1 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 |

| ID | var_one | rho1var | rho2var | rho3var | rho4var | rho5var | rho6var | rho7var | rho8var | rho9var | rho10var |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | -1 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 |

| 2 | 1 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 |

| 3 | -1 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 |

| 4 | 1 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 |

| 5 | -1 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 | 0.1335086 | -0.1059632 | -0.1255472 | 0.5646199 |

2.1.1.6 Tibble Row and Column and Summarize

Show what is in the table: 1, column and row names; 2, contents inside table.

## [1] "1" "2" "3" "4" "5" "6" "7" "8" "9" "10" "11" "12" "13"

## [14] "14" "15" "16" "17" "18" "19" "20" "21" "22" "23" "24" "25" "26"

## [27] "27" "28" "29" "30" "31" "32" "33" "34" "35" "36" "37" "38" "39"

## [40] "40" "41" "42" "43" "44" "45" "46" "47" "48" "49" "50" "51" "52"

## [53] "53" "54" "55" "56" "57" "58" "59" "60" "61" "62" "63" "64" "65"

## [66] "66" "67" "68" "69" "70" "71" "72" "73" "74" "75" "76" "77" "78"

## [79] "79" "80" "81" "82" "83" "84" "85" "86" "87" "88" "89" "90" "91"

## [92] "92" "93" "94" "95" "96" "97" "98" "99" "100" "101" "102" "103" "104"

## [105] "105" "106" "107" "108" "109" "110" "111" "112" "113" "114" "115" "116" "117"

## [118] "118" "119" "120" "121" "122" "123" "124" "125" "126" "127" "128" "129" "130"

## [131] "131" "132" "133" "134" "135" "136" "137" "138" "139" "140" "141" "142" "143"

## [144] "144" "145" "146" "147" "148" "149" "150"## [1] "Sepal.Length" "Sepal.Width" "Petal.Length" "Petal.Width" "Species"## [1] "Sepal.Length" "Sepal.Width" "Petal.Length" "Petal.Width" "Species"## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## Min. :4.300 Min. :2.000 Min. :1.000 Min. :0.100 setosa :50

## 1st Qu.:5.100 1st Qu.:2.800 1st Qu.:1.600 1st Qu.:0.300 versicolor:50

## Median :5.800 Median :3.000 Median :4.350 Median :1.300 virginica :50

## Mean :5.843 Mean :3.057 Mean :3.758 Mean :1.199

## 3rd Qu.:6.400 3rd Qu.:3.300 3rd Qu.:5.100 3rd Qu.:1.800

## Max. :7.900 Max. :4.400 Max. :6.900 Max. :2.5002.1.1.7 Sorting and Rank

2.1.1.7.1 Sorting

- dplyr arrange desc reverse

- dplyr sort

# Sort in Ascending Order

tb_iris %>% select(Species, Sepal.Length, everything()) %>%

arrange(Species, Sepal.Length) %>% head(10) %>%

kable() %>% kable_styling_fc()| Species | Sepal.Length | Sepal.Width | Petal.Length | Petal.Width |

|---|---|---|---|---|

| setosa | 4.3 | 3.0 | 1.1 | 0.1 |

| setosa | 4.4 | 2.9 | 1.4 | 0.2 |

| setosa | 4.4 | 3.0 | 1.3 | 0.2 |

| setosa | 4.4 | 3.2 | 1.3 | 0.2 |

| setosa | 4.5 | 2.3 | 1.3 | 0.3 |

| setosa | 4.6 | 3.1 | 1.5 | 0.2 |

| setosa | 4.6 | 3.4 | 1.4 | 0.3 |

| setosa | 4.6 | 3.6 | 1.0 | 0.2 |

| setosa | 4.6 | 3.2 | 1.4 | 0.2 |

| setosa | 4.7 | 3.2 | 1.3 | 0.2 |

# Sort in Descending Order

tb_iris %>% select(Species, Sepal.Length, everything()) %>%

arrange(desc(Species), desc(Sepal.Length)) %>% head(10) %>%

kable() %>% kable_styling_fc()| Species | Sepal.Length | Sepal.Width | Petal.Length | Petal.Width |

|---|---|---|---|---|

| virginica | 7.9 | 3.8 | 6.4 | 2.0 |

| virginica | 7.7 | 3.8 | 6.7 | 2.2 |

| virginica | 7.7 | 2.6 | 6.9 | 2.3 |

| virginica | 7.7 | 2.8 | 6.7 | 2.0 |

| virginica | 7.7 | 3.0 | 6.1 | 2.3 |

| virginica | 7.6 | 3.0 | 6.6 | 2.1 |

| virginica | 7.4 | 2.8 | 6.1 | 1.9 |

| virginica | 7.3 | 2.9 | 6.3 | 1.8 |

| virginica | 7.2 | 3.6 | 6.1 | 2.5 |

| virginica | 7.2 | 3.2 | 6.0 | 1.8 |

2.1.1.7.2 Create a Ranking Variable

We use dplyr’s ranking functions to generate different types of ranking variables.

The example below demonstrates the differences between the functions row_number(), min_rank(), and dense_rank().

- row_number: Given 10 observations, generates index from 1 to 10, ties are given different ranks.

- min_rank: Given 10 observations, generates rank where second-rank ties are both given “silver”, and the 4th highest ranked variable not given medal.

- dense_rank: Given 10 observations, generates rank where second-rank ties are both given “silver” (2nd rank), and the 4th highest ranked variable is given “bronze” (3rd rank), there are no gaps between ranks.

Note that we have “desc(var_name)” in order to generate the variable based on descending sort of the the “var_name” variable.

tb_iris %>%

select(Species, Sepal.Length) %>% head(10) %>%

mutate(row_number = row_number(desc(Sepal.Length)),

min_rank = min_rank(desc(Sepal.Length)),

dense_rank = dense_rank(desc(Sepal.Length))) %>%

kable(caption = "Ranking variable") %>% kable_styling_fc()| Species | Sepal.Length | row_number | min_rank | dense_rank |

|---|---|---|---|---|

| setosa | 5.1 | 2 | 2 | 2 |

| setosa | 4.9 | 5 | 5 | 4 |

| setosa | 4.7 | 7 | 7 | 5 |

| setosa | 4.6 | 8 | 8 | 6 |

| setosa | 5.0 | 3 | 3 | 3 |

| setosa | 5.4 | 1 | 1 | 1 |

| setosa | 4.6 | 9 | 8 | 6 |

| setosa | 5.0 | 4 | 3 | 3 |

| setosa | 4.4 | 10 | 10 | 7 |

| setosa | 4.9 | 6 | 5 | 4 |

2.1.1.8 REconTools Summarize over Tible

Use R4Econ’s summary tool.

df_summ_stats <- REconTools::ff_summ_percentiles(tb_iris)

kable(t(df_summ_stats)) %>% kable_styling_fc_wide()| stats | n | unique | NAobs | ZEROobs | mean | sd | cv | min | p01 | p05 | p10 | p25 | p50 | p75 | p90 | p95 | p99 | max |

| Petal.Length | 150 | 43 | 0 | 0 | 3.758000 | 1.7652982 | 0.4697441 | 1.0 | 1.149 | 1.300 | 1.4 | 1.6 | 4.35 | 5.1 | 5.80 | 6.100 | 6.700 | 6.9 |

| Petal.Width | 150 | 22 | 0 | 0 | 1.199333 | 0.7622377 | 0.6355511 | 0.1 | 0.100 | 0.200 | 0.2 | 0.3 | 1.30 | 1.8 | 2.20 | 2.300 | 2.500 | 2.5 |

| Sepal.Length | 150 | 35 | 0 | 0 | 5.843333 | 0.8280661 | 0.1417113 | 4.3 | 4.400 | 4.600 | 4.8 | 5.1 | 5.80 | 6.4 | 6.90 | 7.255 | 7.700 | 7.9 |

| Sepal.Width | 150 | 23 | 0 | 0 | 3.057333 | 0.4358663 | 0.1425642 | 2.0 | 2.200 | 2.345 | 2.5 | 2.8 | 3.00 | 3.3 | 3.61 | 3.800 | 4.151 | 4.4 |

2.1.2 Generate Categorical Variables

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

2.1.2.1 Cut Continuous Variable to Categorical Variable

We have a continuous variable, we cut it with explicitly specified cuts to generate a categorical variable, and label it. We will use base::cut().

# break points to specific

fl_min_mpg <- min(mtcars$mpg)

fl_max_mpg <- max(mtcars$mpg)

ar_fl_cuts <- c(10, 20, 30, 40)

# generate labels

ar_st_cuts_lab <- c("10<=mpg<20", "20<=mpg<30", "30<=mpg<40")

# generate new variable

mtcars_cate <- mtcars %>%

tibble::rownames_to_column(var = "cars") %>%

mutate(mpg_grp = base::cut(mpg,

breaks = ar_fl_cuts,

labels = ar_st_cuts_lab,

# if right is FALSE, interval is closed on the left

right = FALSE

)

) %>% select(cars, mpg_grp, mpg) %>%

arrange(mpg) %>% group_by(mpg_grp) %>%

slice_head(n=3)

# Display

st_caption <- "Cuts a continuous var to a categorical var with labels"

kable(mtcars_cate,

caption = st_caption

) %>% kable_styling_fc()| cars | mpg_grp | mpg |

|---|---|---|

| Cadillac Fleetwood | 10<=mpg<20 | 10.4 |

| Lincoln Continental | 10<=mpg<20 | 10.4 |

| Camaro Z28 | 10<=mpg<20 | 13.3 |

| Mazda RX4 | 20<=mpg<30 | 21.0 |

| Mazda RX4 Wag | 20<=mpg<30 | 21.0 |

| Hornet 4 Drive | 20<=mpg<30 | 21.4 |

| Honda Civic | 30<=mpg<40 | 30.4 |

| Lotus Europa | 30<=mpg<40 | 30.4 |

| Fiat 128 | 30<=mpg<40 | 32.4 |

2.1.2.2 Factor, Label, Cross and Graph

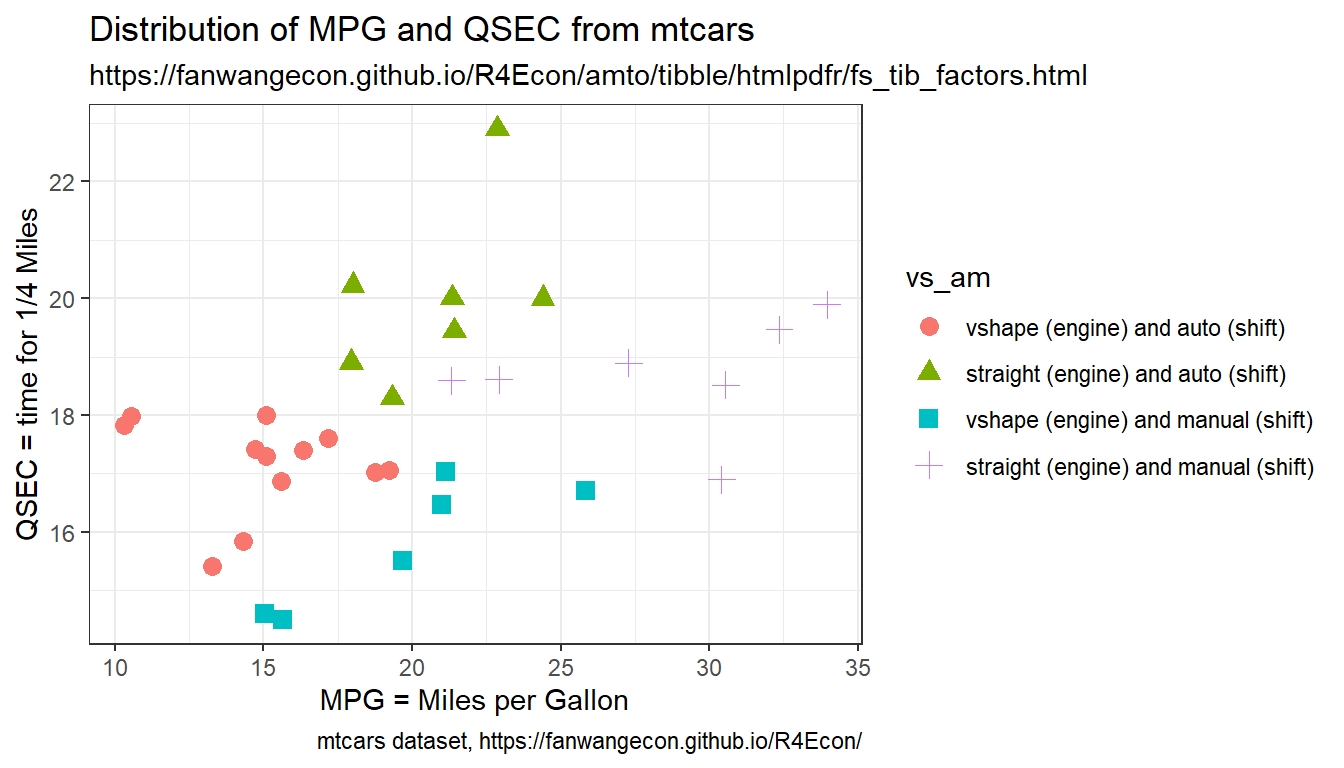

Generate a Scatter plot with different colors representing different categories. There are multiple underlying factor/categorical variables, for example two binary variables. Generate scatter plot with colors for the combinations of these two binary variables.

We combine here the vs and am variables from the mtcars dataset. vs is engine shape, am is auto or manual shift. We will generate a scatter plot of mpg and qsec over four categories with different colors.

- am: Transmission (0 = automatic, 1 = manual)

- vs: Engine (0 = V-shaped, 1 = straight)

- mpg: miles per galon

- qsec: 1/4 mile time

# First make sure these are factors

tb_mtcars <- as_tibble(mtcars) %>%

mutate(vs = as_factor(vs), am = as_factor(am))

# Second Label the Factors

am_levels <- c(auto_shift = "0", manual_shift = "1")

vs_levels <- c(vshaped_engine = "0", straight_engine = "1")

tb_mtcars <- tb_mtcars %>%

mutate(vs = fct_recode(vs, !!!vs_levels),

am = fct_recode(am, !!!am_levels))

# Third Combine Factors

tb_mtcars_selected <- tb_mtcars %>%

mutate(vs_am = fct_cross(vs, am, sep='_', keep_empty = FALSE)) %>%

select(mpg, qsec, vs_am)

# relabel interaction variables

am_vs_levels <- c("vshape (engine) and auto (shift)" = "vshaped_engine_auto_shift",

"vshape (engine) and manual (shift)" = "vshaped_engine_manual_shift",

"straight (engine) and auto (shift)" = "straight_engine_auto_shift",

"straight (engine) and manual (shift)" = "straight_engine_manual_shift")

tb_mtcars_selected <- tb_mtcars_selected %>%

mutate(vs_am = fct_recode(vs_am, !!!am_vs_levels))

# Show

print(tb_mtcars_selected[1:10,])Now we generate scatter plot based on the combined factors

# Labeling

st_title <- paste0('Distribution of MPG and QSEC from mtcars')

st_subtitle <- paste0('https://fanwangecon.github.io/',

'R4Econ/amto/tibble/htmlpdfr/fs_tib_factors.html')

st_caption <- paste0('mtcars dataset, ',

'https://fanwangecon.github.io/R4Econ/')

st_x_label <- 'MPG = Miles per Gallon'

st_y_label <- 'QSEC = time for 1/4 Miles'

# Graphing

plt_mtcars_scatter <-

ggplot(tb_mtcars_selected,

aes(x=mpg, y=qsec, colour=vs_am, shape=vs_am)) +

geom_jitter(size=3, width = 0.15) +

labs(title = st_title, subtitle = st_subtitle,

x = st_x_label, y = st_y_label, caption = st_caption) +

theme_bw()

# show

print(plt_mtcars_scatter)

2.1.3 Drawly Random Rows

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

2.1.3.1 Draw Random Subset of Sample

- r random discrete

We have a sample of N individuals in some dataframe. Draw without replacement a subset \(M<N\) of rows.

# parameters, it_M < it_N

it_N <- 10

it_M <- 5

# Draw it_m from indexed list of it_N

set.seed(123)

ar_it_rand_idx <- sample(it_N, it_M, replace=FALSE)

# dataframe

df_full <- as_tibble(matrix(rnorm(4,mean=0,sd=1), nrow=it_N, ncol=4)) %>% rowid_to_column(var = "ID")

# random Subset

df_rand_sub_a <- df_full[ar_it_rand_idx,]

# Random subset also

df_rand_sub_b <- df_full[sample(dim(df_full)[1], it_M, replace=FALSE),]

# Print

# Display

kable(df_full) %>% kable_styling_fc()| ID | V1 | V2 | V3 | V4 |

|---|---|---|---|---|

| 1 | 0.1292877 | 0.4609162 | 0.1292877 | 0.4609162 |

| 2 | 1.7150650 | -1.2650612 | 1.7150650 | -1.2650612 |

| 3 | 0.4609162 | 0.1292877 | 0.4609162 | 0.1292877 |

| 4 | -1.2650612 | 1.7150650 | -1.2650612 | 1.7150650 |

| 5 | 0.1292877 | 0.4609162 | 0.1292877 | 0.4609162 |

| 6 | 1.7150650 | -1.2650612 | 1.7150650 | -1.2650612 |

| 7 | 0.4609162 | 0.1292877 | 0.4609162 | 0.1292877 |

| 8 | -1.2650612 | 1.7150650 | -1.2650612 | 1.7150650 |

| 9 | 0.1292877 | 0.4609162 | 0.1292877 | 0.4609162 |

| 10 | 1.7150650 | -1.2650612 | 1.7150650 | -1.2650612 |

| ID | V1 | V2 | V3 | V4 |

|---|---|---|---|---|

| 3 | 0.4609162 | 0.1292877 | 0.4609162 | 0.1292877 |

| 10 | 1.7150650 | -1.2650612 | 1.7150650 | -1.2650612 |

| 2 | 1.7150650 | -1.2650612 | 1.7150650 | -1.2650612 |

| 8 | -1.2650612 | 1.7150650 | -1.2650612 | 1.7150650 |

| 6 | 1.7150650 | -1.2650612 | 1.7150650 | -1.2650612 |

| ID | V1 | V2 | V3 | V4 |

|---|---|---|---|---|

| 5 | 0.1292877 | 0.4609162 | 0.1292877 | 0.4609162 |

| 3 | 0.4609162 | 0.1292877 | 0.4609162 | 0.1292877 |

| 9 | 0.1292877 | 0.4609162 | 0.1292877 | 0.4609162 |

| 1 | 0.1292877 | 0.4609162 | 0.1292877 | 0.4609162 |

| 4 | -1.2650612 | 1.7150650 | -1.2650612 | 1.7150650 |

2.1.3.2 Random Subset of Panel

There are \(N\) individuals, each could be observed \(M\) times, but then select a subset of rows only, so each person is randomly observed only a subset of times. Specifically, there there are 3 unique students with student ids, and the second variable shows the random dates in which the student showed up in class, out of the 10 classes available.

# Define

it_N <- 3

it_M <- 10

svr_id <- 'student_id'

# dataframe

set.seed(123)

df_panel_rand <- as_tibble(matrix(it_M, nrow=it_N, ncol=1)) %>%

rowid_to_column(var = svr_id) %>%

uncount(V1) %>%

group_by(!!sym(svr_id)) %>% mutate(date = row_number()) %>%

ungroup() %>% mutate(in_class = case_when(rnorm(n(),mean=0,sd=1) < 0 ~ 1, TRUE ~ 0)) %>%

dplyr::filter(in_class == 1) %>% select(!!sym(svr_id), date) %>%

rename(date_in_class = date)

# Print

kable(df_panel_rand) %>% kable_styling_fc()| student_id | date_in_class |

|---|---|

| 1 | 1 |

| 1 | 2 |

| 1 | 8 |

| 1 | 9 |

| 1 | 10 |

| 2 | 5 |

| 2 | 8 |

| 2 | 10 |

| 3 | 1 |

| 3 | 2 |

| 3 | 3 |

| 3 | 4 |

| 3 | 5 |

| 3 | 6 |

| 3 | 9 |

2.1.4 Generate Variables Conditional On Others

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

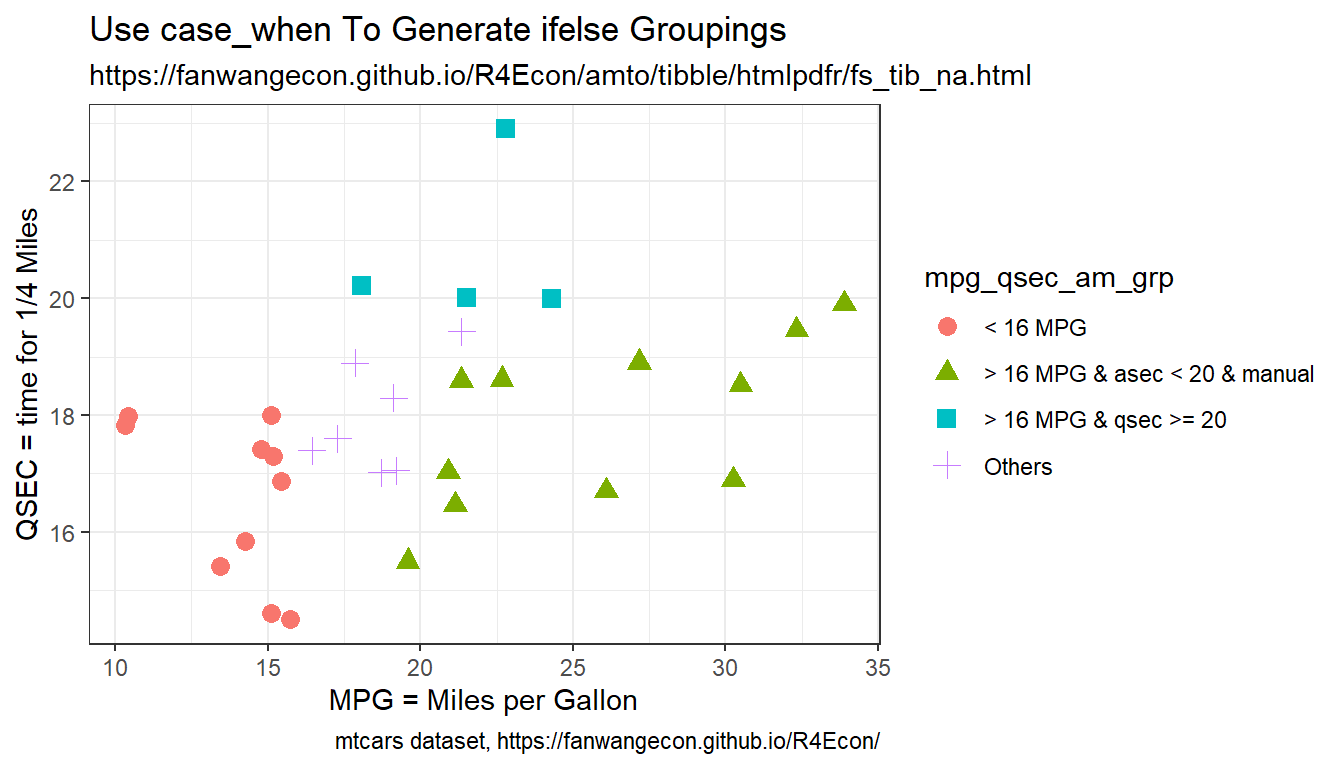

2.1.4.1 Categorical Variable based on Several Variables

Given several other variables, and generate a new variable when these varaibles satisfy conditions. Note that case_when are ifelse type statements. So below

- group one is below 16 MPG

- when do qsec >= 20 second line that is elseif, only those that are >=16 are considered here

- then think about two dimensional mpg and qsec grid, the lower-right area, give another category to manual cars in that group

First, we generate categorical variables based on the characteristics of several variables.

# Get mtcars

df_mtcars <- mtcars

# case_when with mtcars

df_mtcars <- df_mtcars %>%

mutate(

mpg_qsec_am_grp =

case_when(

mpg < 16 ~ "< 16 MPG",

qsec >= 20 ~ "> 16 MPG & qsec >= 20",

am == 1 ~ "> 16 MPG & asec < 20 & manual",

TRUE ~ "Others"

)

)Now we generate scatter plot based on the combined factors

# Labeling

st_title <- paste0("Use case_when To Generate ifelse Groupings")

st_subtitle <- paste0(

"https://fanwangecon.github.io/",

"R4Econ/amto/tibble/htmlpdfr/fs_tib_na.html"

)

st_caption <- paste0(

"mtcars dataset, ",

"https://fanwangecon.github.io/R4Econ/"

)

st_x_label <- "MPG = Miles per Gallon"

st_y_label <- "QSEC = time for 1/4 Miles"

# Graphing

plt_mtcars_casewhen_scatter <-

ggplot(

df_mtcars,

aes(

x = mpg, y = qsec,

colour = mpg_qsec_am_grp,

shape = mpg_qsec_am_grp

)

) +

geom_jitter(size = 3, width = 0.15) +

labs(

title = st_title, subtitle = st_subtitle,

x = st_x_label, y = st_y_label, caption = st_caption

) +

theme_bw()

# show

print(plt_mtcars_casewhen_scatter)

2.1.4.2 Categorical Variables based on one Continuous Variable

We generate one categorical variable for gear, based on “continuous” gear values. Note that the same categorical label appears for gear is 3 as well as gear is 5.

# Generate a categorical variable

df_mtcars <- df_mtcars %>%

mutate(gear_cate = case_when(

gear == 3 ~ "gear is 3",

gear == 4 ~ "gear is 4",

gear == 5 & hp <= 110 ~ "gear 5 hp les sequal 110",

gear == 5 & hp > 110 & hp <= 200 ~ "gear 5 hp 110 to 130",

TRUE ~ "otherwise"

))

# Tabulate

df_mtcars_gear_tb <- df_mtcars %>%

group_by(gear_cate, gear) %>%

tally() %>%

spread(gear_cate, n)

# Display

st_title <- "Categorical from continuous with non-continuous values matching to same key"

df_mtcars_gear_tb %>% kable(caption = st_title) %>%

kable_styling_fc()| gear | gear 5 hp 110 to 130 | gear 5 hp les sequal 110 | gear is 3 | gear is 4 | otherwise |

|---|---|---|---|---|---|

| 3 | NA | NA | 15 | NA | NA |

| 4 | NA | NA | NA | 12 | NA |

| 5 | 2 | 1 | NA | NA | 2 |

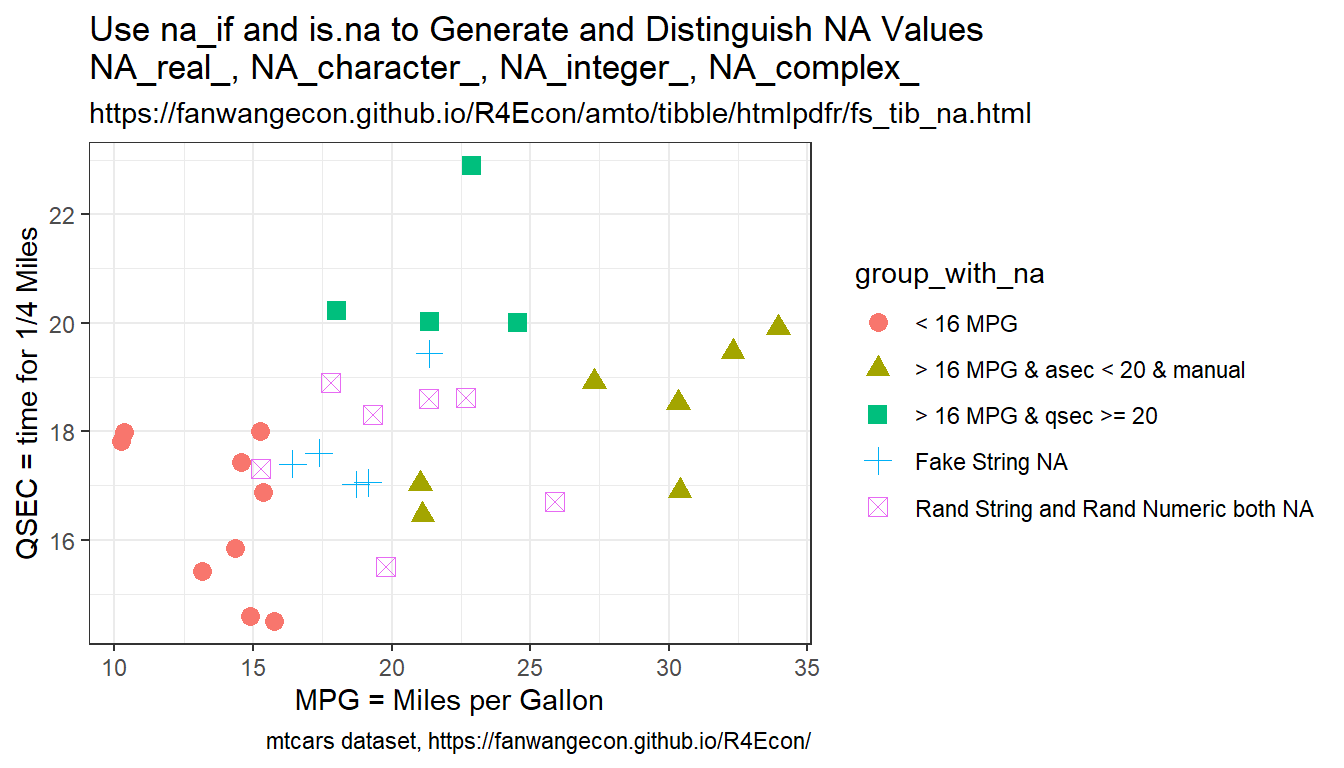

2.1.4.3 Generate NA values if Variables have Certain Value

In the example below, in one line:

- generate a random standard normal vector

- two set na methods:

- if the value of the standard normal is negative, set value to -999, otherwise MPG, replace the value -999 with NA

- case_when only with type specific NA values

- Assigning NA yields error in case_when

- note we need to conform NA to type

- generate new categorical variable based on NA condition using is.na with both string and numeric NAs jointly considered.

- fake NA string to be printed on chart

# Get mtcars

df_mtcars <- mtcars

# Make some values of mpg randomly NA

# the NA has to conform to the type of the remaining values for the new variable

# NA_real_, NA_character_, NA_integer_, NA_complex_

set.seed(2341)

df_mtcars <- df_mtcars %>%

mutate(mpg_wth_NA1 = na_if(

case_when(

rnorm(n(), mean = 0, sd = 1) < 0 ~ -999,

TRUE ~ mpg

),

-999

)) %>%

mutate(mpg_wth_NA2 = case_when(

rnorm(n(), mean = 0, sd = 1) < 0 ~ NA_real_,

TRUE ~ mpg

)) %>%

mutate(mpg_wth_NA3 = case_when(

rnorm(n(), mean = 0, sd = 1) < 0 ~ NA_character_,

TRUE ~ "shock > 0 string"

))

# Generate New Variables based on if mpg_wth_NA is NA or not

# same variable as above, but now first a category based on if NA

# And we generate a fake string "NA" variable, this is not NA

# the String NA allows for it to be printed on figure

df_mtcars <- df_mtcars %>%

mutate(

group_with_na =

case_when(

is.na(mpg_wth_NA2) & is.na(mpg_wth_NA3) ~

"Rand String and Rand Numeric both NA",

mpg < 16 ~ "< 16 MPG",

qsec >= 20 ~ "> 16 MPG & qsec >= 20",

am == 1 ~ "> 16 MPG & asec < 20 & manual",

TRUE ~ "Fake String NA"

)

)

# show

kable(head(df_mtcars %>% select(starts_with("mpg")), 13)) %>%

kable_styling_fc()| mpg | mpg_wth_NA1 | mpg_wth_NA2 | mpg_wth_NA3 | |

|---|---|---|---|---|

| Mazda RX4 | 21.0 | NA | NA | shock > 0 string |

| Mazda RX4 Wag | 21.0 | 21.0 | 21.0 | NA |

| Datsun 710 | 22.8 | NA | NA | NA |

| Hornet 4 Drive | 21.4 | NA | 21.4 | NA |

| Hornet Sportabout | 18.7 | NA | 18.7 | NA |

| Valiant | 18.1 | 18.1 | NA | shock > 0 string |

| Duster 360 | 14.3 | 14.3 | NA | shock > 0 string |

| Merc 240D | 24.4 | NA | 24.4 | NA |

| Merc 230 | 22.8 | 22.8 | 22.8 | NA |

| Merc 280 | 19.2 | 19.2 | NA | NA |

| Merc 280C | 17.8 | NA | NA | NA |

| Merc 450SE | 16.4 | 16.4 | 16.4 | NA |

| Merc 450SL | 17.3 | NA | NA | shock > 0 string |

# # Setting to NA

# df.reg.use <- df.reg.guat %>% filter(!!sym(var.mth) != 0)

# df.reg.use.log <- df.reg.use

# df.reg.use.log[which(is.nan(df.reg.use$prot.imputed.log)),] = NA

# df.reg.use.log[which(df.reg.use$prot.imputed.log==Inf),] = NA

# df.reg.use.log[which(df.reg.use$prot.imputed.log==-Inf),] = NA

# df.reg.use.log <- df.reg.use.log %>% drop_na(prot.imputed.log)

# # df.reg.use.log$prot.imputed.logNow we generate scatter plot based on the combined factors, but now with the NA category

# Labeling

st_title <- paste0(

"Use na_if and is.na to Generate and Distinguish NA Values\n",

"NA_real_, NA_character_, NA_integer_, NA_complex_"

)

st_subtitle <- paste0(

"https://fanwangecon.github.io/",

"R4Econ/amto/tibble/htmlpdfr/fs_tib_na.html"

)

st_caption <- paste0(

"mtcars dataset, ",

"https://fanwangecon.github.io/R4Econ/"

)

st_x_label <- "MPG = Miles per Gallon"

st_y_label <- "QSEC = time for 1/4 Miles"

# Graphing

plt_mtcars_ifisna_scatter <-

ggplot(

df_mtcars,

aes(

x = mpg, y = qsec,

colour = group_with_na,

shape = group_with_na

)

) +

geom_jitter(size = 3, width = 0.15) +

labs(

title = st_title, subtitle = st_subtitle,

x = st_x_label, y = st_y_label, caption = st_caption

) +

theme_bw()

# show

print(plt_mtcars_ifisna_scatter)

2.1.4.4 Approximate Values Comparison

- r values almost the same

- all.equal

From numeric approximation, often values are very close, and should be set to equal. Use isTRUE(all.equal). In the example below, we randomly generates four arrays. Two of the arrays have slightly higher variance, two arrays have slightly lower variance. They sd are to be 10 times below or 10 times above the tolerance comparison level. The values are not the same in any of the columns, but by allowing for almost true given some tolerance level, in the low standard deviation case, the values differences are within tolerance, so they are equal.

This is an essential issue when dealing with optimization results.

# Set tolerance

tol_lvl <- 1.5e-3

sd_lower_than_tol <- tol_lvl / 10

sd_higher_than_tol <- tol_lvl * 10

# larger SD

set.seed(123)

mt_runif_standard <- matrix(rnorm(10, mean = 0, sd = sd_higher_than_tol), nrow = 5, ncol = 2)

# small SD

set.seed(123)

mt_rnorm_small_sd <- matrix(rnorm(10, mean = 0, sd = sd_lower_than_tol), nrow = 5, ncol = 2)

# Generates Random Matirx

tb_rnorm_runif <- as_tibble(cbind(mt_rnorm_small_sd, mt_runif_standard))

# Are Variables the same, not for strict comparison

tb_rnorm_runif_approxi_same <- tb_rnorm_runif %>%

mutate(

V1_V2_ALMOST_SAME =

case_when(

isTRUE(all.equal(V1, V2, tolerance = tol_lvl)) ~

paste0("TOL=", sd_lower_than_tol, ", SAME ALMOST"),

TRUE ~

paste0("TOL=", sd_lower_than_tol, ", NOT SAME ALMOST")

)

) %>%

mutate(

V3_V4_ALMOST_SAME =

case_when(

isTRUE(all.equal(V3, V4, tolerance = tol_lvl)) ~

paste0("TOL=", sd_higher_than_tol, ", SAME ALMOST"),

TRUE ~

paste0("TOL=", sd_higher_than_tol, ", NOT SAME ALMOST")

)

)

# Pring

kable(tb_rnorm_runif_approxi_same) %>% kable_styling_fc_wide()| V1 | V2 | V3 | V4 | V1_V2_ALMOST_SAME | V3_V4_ALMOST_SAME |

|---|---|---|---|---|---|

| -0.0000841 | 0.0002573 | -0.0084071 | 0.0257260 | TOL=0.00015, SAME ALMOST | TOL=0.015, NOT SAME ALMOST |

| -0.0000345 | 0.0000691 | -0.0034527 | 0.0069137 | TOL=0.00015, SAME ALMOST | TOL=0.015, NOT SAME ALMOST |

| 0.0002338 | -0.0001898 | 0.0233806 | -0.0189759 | TOL=0.00015, SAME ALMOST | TOL=0.015, NOT SAME ALMOST |

| 0.0000106 | -0.0001030 | 0.0010576 | -0.0103028 | TOL=0.00015, SAME ALMOST | TOL=0.015, NOT SAME ALMOST |

| 0.0000194 | -0.0000668 | 0.0019393 | -0.0066849 | TOL=0.00015, SAME ALMOST | TOL=0.015, NOT SAME ALMOST |

2.1.5 String Dataframes

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

2.1.5.1 List of Strings to Tibble Datfare

There are several lists of strings, store them as variables in a dataframe.

# Sting data inputs

ls_st_abc <- c('a', 'b', 'c')

ls_st_efg <- c('e', 'f', 'g')

ls_st_opq <- c('o', 'p', 'q')

mt_str = cbind(ls_st_abc, ls_st_efg, ls_st_opq)

# Column Names

ar_st_varnames <- c('id','var1','var2','var3')

# Combine to tibble, add name col1, col2, etc.

tb_st_combine <- as_tibble(mt_str) %>%

rowid_to_column(var = "id") %>%

rename_all(~c(ar_st_varnames))

# Display

kable(tb_st_combine) %>% kable_styling_fc()| id | var1 | var2 | var3 |

|---|---|---|---|

| 1 | a | e | o |

| 2 | b | f | p |

| 3 | c | g | q |

2.1.5.2 Find and Replace

Find and Replace in Dataframe.

# if string value is contained in variable

("bridex.B" %in% (df.reg.out.all$vars_var.y))

# if string value is not contained in variable:

# 1. type is variable name

# 2. Toyota|Mazda are strings to be excluded

filter(mtcars, !grepl('Toyota|Mazda', type))

# filter does not contain string

rs_hgt_prot_log_tidy %>% filter(!str_detect(term, 'prot'))2.2 Counting Observation

2.2.1 Counting and Tabulations

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

2.2.1.1 Tabulate Two Categorial Variables

First, we tabulate a dataset, and show categories as rows, and display frequencies.

# We use the mtcars dataset

tb_tab_joint <- mtcars %>%

group_by(gear, am) %>%

tally()

# Display

tb_tab_joint %>%

kable(caption = "cross tabulation, stacked") %>%

kable_styling_fc()| gear | am | n |

|---|---|---|

| 3 | 0 | 15 |

| 4 | 0 | 4 |

| 4 | 1 | 8 |

| 5 | 1 | 5 |

We can present this as cross tabs.

# We use the mtcars dataset

tb_cross_tab <- mtcars %>%

group_by(gear, am) %>%

tally() %>%

spread(am, n)

# Display

tb_cross_tab %>%

kable(caption = "cross tabulation") %>%

kable_styling_fc()| gear | 0 | 1 |

|---|---|---|

| 3 | 15 | NA |

| 4 | 4 | 8 |

| 5 | NA | 5 |

2.2.1.2 Tabulate Once Each Distinct Subgroup

We have two variables variables, am and mpg, the mpg values are not unique. We want to know how many unique mpg levels are there for each am group. We use the dplyr::distinct function to achieve this.

tb_dist_tab <- mtcars %>%

# .keep_all to keep all variables

distinct(am, mpg, .keep_all = TRUE) %>%

group_by(am) %>%

tally()

# Display

tb_dist_tab %>%

kable(caption = "Tabulate distinct groups") %>%

kable_styling_fc()| am | n |

|---|---|

| 0 | 16 |

| 1 | 11 |

2.2.1.3 Expanding to Panel

There are \(N\) individuals, each observed for \(Y_i\) years. We start with a dataframe where individuals are the unit of observation, we expand this to a panel with a row for each of the years that the individual is in the survey for.

Algorithm:

- generate testing frame, the individual attribute dataset with invariant information over panel

- uncount, duplicate rows by years in survey

- group and generate sorted index

- add indiviual specific stat year to index

First, we construct the dataframe where each row is an individual.

# 1. Array of Years in the Survey

ar_years_in_survey <- c(2, 3, 1, 10, 2, 5)

ar_start_yaer <- c(1, 2, 3, 1, 1, 1)

ar_end_year <- c(2, 4, 3, 10, 2, 5)

mt_combine <- cbind(ar_years_in_survey, ar_start_yaer, ar_end_year)

# This is the individual attribute dataset, attributes that are invariant acrosss years

tb_indi_attributes <- as_tibble(mt_combine) %>% rowid_to_column(var = "ID")

# Display

tb_indi_attributes %>%

head(10) %>%

kable() %>%

kable_styling_fc()| ID | ar_years_in_survey | ar_start_yaer | ar_end_year |

|---|---|---|---|

| 1 | 2 | 1 | 2 |

| 2 | 3 | 2 | 4 |

| 3 | 1 | 3 | 3 |

| 4 | 10 | 1 | 10 |

| 5 | 2 | 1 | 2 |

| 6 | 5 | 1 | 5 |

Second, we change the dataframe so that each unit of observation is an individual in an year. This means we will duplicate the information in the prior table, so if an individual appears for 4 years in the survey, we will now have four rows for this individual. We generate a new variable that is the calendar year. This is now a panel dataset.

# 2. Sort and generate variable equal to sorted index

tb_indi_panel <- tb_indi_attributes %>% uncount(ar_years_in_survey)

# 3. Panel now construct exactly which year in survey, note that all needed is sort index

# Note sorting not needed, all rows identical now

tb_indi_panel <- tb_indi_panel %>%

group_by(ID) %>%

mutate(yr_in_survey = row_number())

tb_indi_panel <- tb_indi_panel %>%

mutate(calendar_year = yr_in_survey + ar_start_yaer - 1)

# Show results Head 10

tb_indi_panel %>%

head(10) %>%

kable() %>%

kable_styling_fc()| ID | ar_start_yaer | ar_end_year | yr_in_survey | calendar_year |

|---|---|---|---|---|

| 1 | 1 | 2 | 1 | 1 |

| 1 | 1 | 2 | 2 | 2 |

| 2 | 2 | 4 | 1 | 2 |

| 2 | 2 | 4 | 2 | 3 |

| 2 | 2 | 4 | 3 | 4 |

| 3 | 3 | 3 | 1 | 3 |

| 4 | 1 | 10 | 1 | 1 |

| 4 | 1 | 10 | 2 | 2 |

| 4 | 1 | 10 | 3 | 3 |

| 4 | 1 | 10 | 4 | 4 |

2.3 Sorting, Indexing, Slicing

2.3.1 Sorting

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

2.3.1.1 Generate Sorted Index within Group with Repeating Values

There is a variable, sort by this variable, then generate index from 1 to N representing sorted values of this index. If there are repeating values, still assign index, different index each value.

- r generate index sort

- dplyr mutate equals index

# Sort and generate variable equal to sorted index

df_iris <- iris %>% arrange(Sepal.Length) %>%

mutate(Sepal.Len.Index = row_number()) %>%

select(Sepal.Length, Sepal.Len.Index, everything())

# Show results Head 10

df_iris %>% head(10) %>%

kable() %>%

kable_styling_fc_wide()| Sepal.Length | Sepal.Len.Index | Sepal.Width | Petal.Length | Petal.Width | Species |

|---|---|---|---|---|---|

| 4.3 | 1 | 3.0 | 1.1 | 0.1 | setosa |

| 4.4 | 2 | 2.9 | 1.4 | 0.2 | setosa |

| 4.4 | 3 | 3.0 | 1.3 | 0.2 | setosa |

| 4.4 | 4 | 3.2 | 1.3 | 0.2 | setosa |

| 4.5 | 5 | 2.3 | 1.3 | 0.3 | setosa |

| 4.6 | 6 | 3.1 | 1.5 | 0.2 | setosa |

| 4.6 | 7 | 3.4 | 1.4 | 0.3 | setosa |

| 4.6 | 8 | 3.6 | 1.0 | 0.2 | setosa |

| 4.6 | 9 | 3.2 | 1.4 | 0.2 | setosa |

| 4.7 | 10 | 3.2 | 1.3 | 0.2 | setosa |

2.3.1.2 Populate Value from Lowest Index to All other Rows

We would like to calculate for example the ratio of each individual’s highest to the the person with the lowest height in a dataset. We first need to generated sorted index from lowest to highest, and then populate the lowest height to all rows, and then divide.

Search Terms:

- r spread value to all rows from one row

- r other rows equal to the value of one row

- Conditional assignment of one variable to the value of one of two other variables

- dplyr mutate conditional

- dplyr value from one row to all rows

- dplyr mutate equal to value in another cell

Links:

2.3.1.2.1 Short Method: mutate and min

We just want the lowest value to be in its own column, so that we can compute various statistics using the lowest value variable and the original variable.

# 1. Sort

df_iris_m1 <- iris %>% mutate(Sepal.Len.Lowest.all = min(Sepal.Length)) %>%

select(Sepal.Length, Sepal.Len.Lowest.all, everything())

# Show results Head 10

df_iris_m1 %>% head(10) %>%

kable() %>%

kable_styling_fc_wide()| Sepal.Length | Sepal.Len.Lowest.all | Sepal.Width | Petal.Length | Petal.Width | Species |

|---|---|---|---|---|---|

| 5.1 | 4.3 | 3.5 | 1.4 | 0.2 | setosa |

| 4.9 | 4.3 | 3.0 | 1.4 | 0.2 | setosa |

| 4.7 | 4.3 | 3.2 | 1.3 | 0.2 | setosa |

| 4.6 | 4.3 | 3.1 | 1.5 | 0.2 | setosa |

| 5.0 | 4.3 | 3.6 | 1.4 | 0.2 | setosa |

| 5.4 | 4.3 | 3.9 | 1.7 | 0.4 | setosa |

| 4.6 | 4.3 | 3.4 | 1.4 | 0.3 | setosa |

| 5.0 | 4.3 | 3.4 | 1.5 | 0.2 | setosa |

| 4.4 | 4.3 | 2.9 | 1.4 | 0.2 | setosa |

| 4.9 | 4.3 | 3.1 | 1.5 | 0.1 | setosa |

2.3.1.2.2 Long Method: row_number and case_when

This is the long method, using row_number, and case_when. The benefit of this method is that it generates several intermediate variables that might be useful. And the key final step is to set a new variable (A=Sepal.Len.Lowest.all) equal to another variable’s (B=Sepal.Length’s) value at the index that satisfies condition based a third variable (C=Sepal.Len.Index).

# 1. Sort

# 2. generate index

# 3. value at lowest index (case_when)

# 4. spread value from lowest index to other rows

# Note step 4 does not require step 3

df_iris_m2 <- iris %>% arrange(Sepal.Length) %>%

mutate(Sepal.Len.Index = row_number()) %>%

mutate(Sepal.Len.Lowest.one =

case_when(row_number()==1 ~ Sepal.Length)) %>%

mutate(Sepal.Len.Lowest.all =

Sepal.Length[Sepal.Len.Index==1]) %>%

select(Sepal.Length, Sepal.Len.Index,

Sepal.Len.Lowest.one, Sepal.Len.Lowest.all)

# Show results Head 10

df_iris_m2 %>% head(10) %>%

kable() %>%

kable_styling_fc_wide()| Sepal.Length | Sepal.Len.Index | Sepal.Len.Lowest.one | Sepal.Len.Lowest.all |

|---|---|---|---|

| 4.3 | 1 | 4.3 | 4.3 |

| 4.4 | 2 | NA | 4.3 |

| 4.4 | 3 | NA | 4.3 |

| 4.4 | 4 | NA | 4.3 |

| 4.5 | 5 | NA | 4.3 |

| 4.6 | 6 | NA | 4.3 |

| 4.6 | 7 | NA | 4.3 |

| 4.6 | 8 | NA | 4.3 |

| 4.6 | 9 | NA | 4.3 |

| 4.7 | 10 | NA | 4.3 |

2.3.1.3 Generate Sorted Index based on Deviations

Generate Positive and Negative Index based on Ordered Deviation from some Number.

There is a variable that is continuous, substract a number from this variable, and generate index based on deviations. Think of the index as generating intervals indicating where the value lies. 0th index indicates the largest value in sequence that is smaller than or equal to number \(x\), 1st index indicates the smallest value in sequence that is larger than number \(x\).

The solution below is a little bit convoluated and long, there is likely a much quicker way. The process below shows various intermediary outputs that help arrive at deviation index Sepal.Len.Devi.Index from initial sorted index Sepal.Len.Index.

search:

- dplyr arrange ignore na

- dplyr index deviation from order number sequence

- dplyr index below above

- dplyr index order below above value

# 1. Sort and generate variable equal to sorted index

# 2. Plus or minus deviations from some value

# 3. Find the zero, which means, the number closests to zero including zero from the negative side

# 4. Find the index at the highest zero and below deviation point

# 5. Difference of zero index and original sorted index

sc_val_x <- 4.65

df_iris_deviate <- iris %>% arrange(Sepal.Length) %>%

mutate(Sepal.Len.Index = row_number()) %>%

mutate(Sepal.Len.Devi = (Sepal.Length - sc_val_x)) %>%

mutate(Sepal.Len.Devi.Neg =

case_when(Sepal.Len.Devi <= 0 ~ (-1)*(Sepal.Len.Devi))) %>%

arrange((Sepal.Len.Devi.Neg), desc(Sepal.Len.Index)) %>%

mutate(Sepal.Len.Index.Zero =

case_when(row_number() == 1 ~ Sepal.Len.Index)) %>%

mutate(Sepal.Len.Devi.Index =

Sepal.Len.Index - Sepal.Len.Index.Zero[row_number() == 1]) %>%

arrange(Sepal.Len.Index) %>%

select(Sepal.Length, Sepal.Len.Index, Sepal.Len.Devi,

Sepal.Len.Devi.Neg, Sepal.Len.Index.Zero, Sepal.Len.Devi.Index)

# Show results Head 10

df_iris_deviate %>% head(20) %>%

kable() %>%

kable_styling_fc_wide()| Sepal.Length | Sepal.Len.Index | Sepal.Len.Devi | Sepal.Len.Devi.Neg | Sepal.Len.Index.Zero | Sepal.Len.Devi.Index |

|---|---|---|---|---|---|

| 4.3 | 1 | -0.35 | 0.35 | NA | -8 |

| 4.4 | 2 | -0.25 | 0.25 | NA | -7 |

| 4.4 | 3 | -0.25 | 0.25 | NA | -6 |

| 4.4 | 4 | -0.25 | 0.25 | NA | -5 |

| 4.5 | 5 | -0.15 | 0.15 | NA | -4 |

| 4.6 | 6 | -0.05 | 0.05 | NA | -3 |

| 4.6 | 7 | -0.05 | 0.05 | NA | -2 |

| 4.6 | 8 | -0.05 | 0.05 | NA | -1 |

| 4.6 | 9 | -0.05 | 0.05 | 9 | 0 |

| 4.7 | 10 | 0.05 | NA | NA | 1 |

| 4.7 | 11 | 0.05 | NA | NA | 2 |

| 4.8 | 12 | 0.15 | NA | NA | 3 |

| 4.8 | 13 | 0.15 | NA | NA | 4 |

| 4.8 | 14 | 0.15 | NA | NA | 5 |

| 4.8 | 15 | 0.15 | NA | NA | 6 |

| 4.8 | 16 | 0.15 | NA | NA | 7 |

| 4.9 | 17 | 0.25 | NA | NA | 8 |

| 4.9 | 18 | 0.25 | NA | NA | 9 |

| 4.9 | 19 | 0.25 | NA | NA | 10 |

| 4.9 | 20 | 0.25 | NA | NA | 11 |

2.3.2 Group, Sort and Slice

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

2.3.2.1 Sort in Ascending and Descending Orders

We sort the mtcars dataset, sorting in ascending order by cyl, and in descending order by mpg. Using arrange, desc(disp) means sorting the disp variable in descending order. In the table shown below, cyc is increasing, and disp id decreasing within each cyc group.

kable(mtcars %>%

arrange(cyl, desc(disp)) %>%

# Select and filter to reduce display clutter

select(cyl, disp, mpg)) %>%

kable_styling_fc()| cyl | disp | mpg | |

|---|---|---|---|

| Merc 240D | 4 | 146.7 | 24.4 |

| Merc 230 | 4 | 140.8 | 22.8 |

| Volvo 142E | 4 | 121.0 | 21.4 |

| Porsche 914-2 | 4 | 120.3 | 26.0 |

| Toyota Corona | 4 | 120.1 | 21.5 |

| Datsun 710 | 4 | 108.0 | 22.8 |

| Lotus Europa | 4 | 95.1 | 30.4 |

| Fiat X1-9 | 4 | 79.0 | 27.3 |

| Fiat 128 | 4 | 78.7 | 32.4 |

| Honda Civic | 4 | 75.7 | 30.4 |

| Toyota Corolla | 4 | 71.1 | 33.9 |

| Hornet 4 Drive | 6 | 258.0 | 21.4 |

| Valiant | 6 | 225.0 | 18.1 |

| Merc 280 | 6 | 167.6 | 19.2 |

| Merc 280C | 6 | 167.6 | 17.8 |

| Mazda RX4 | 6 | 160.0 | 21.0 |

| Mazda RX4 Wag | 6 | 160.0 | 21.0 |

| Ferrari Dino | 6 | 145.0 | 19.7 |

| Cadillac Fleetwood | 8 | 472.0 | 10.4 |

| Lincoln Continental | 8 | 460.0 | 10.4 |

| Chrysler Imperial | 8 | 440.0 | 14.7 |

| Pontiac Firebird | 8 | 400.0 | 19.2 |

| Hornet Sportabout | 8 | 360.0 | 18.7 |

| Duster 360 | 8 | 360.0 | 14.3 |

| Ford Pantera L | 8 | 351.0 | 15.8 |

| Camaro Z28 | 8 | 350.0 | 13.3 |

| Dodge Challenger | 8 | 318.0 | 15.5 |

| AMC Javelin | 8 | 304.0 | 15.2 |

| Maserati Bora | 8 | 301.0 | 15.0 |

| Merc 450SE | 8 | 275.8 | 16.4 |

| Merc 450SL | 8 | 275.8 | 17.3 |

| Merc 450SLC | 8 | 275.8 | 15.2 |

2.3.2.2 Get Highest Values from Groups

There is a dataframe with a grouping variable with N unique values, for example N classes. Find the top three highest scoring students from each class. In the example below, group by cyl and get the cars with the highest and second highest mpg cars in each cyl group.

# use mtcars: slice_head gets the lowest sorted value

df_groupby_top_mpg <- mtcars %>%

rownames_to_column(var = "car") %>%

arrange(cyl, desc(mpg)) %>%

group_by(cyl) %>%

slice_head(n=3) %>%

select(car, cyl, mpg, disp, hp)

# display

kable(df_groupby_top_mpg) %>% kable_styling_fc()| car | cyl | mpg | disp | hp |

|---|---|---|---|---|

| Toyota Corolla | 4 | 33.9 | 71.1 | 65 |

| Fiat 128 | 4 | 32.4 | 78.7 | 66 |

| Honda Civic | 4 | 30.4 | 75.7 | 52 |

| Hornet 4 Drive | 6 | 21.4 | 258.0 | 110 |

| Mazda RX4 | 6 | 21.0 | 160.0 | 110 |

| Mazda RX4 Wag | 6 | 21.0 | 160.0 | 110 |

| Pontiac Firebird | 8 | 19.2 | 400.0 | 175 |

| Hornet Sportabout | 8 | 18.7 | 360.0 | 175 |

| Merc 450SL | 8 | 17.3 | 275.8 | 180 |

2.3.2.3 Differences in Within-group Sorted Value

We first take the largest N values in M groups, then we difference between the ranked top values in each group.

We have N classes, and M students in each class. We first select the 3 students with the highest scores from each class, then we take the difference between 1st and 2nd, and the difference between the 2nd and the 3rd students.

Note that when are using descending sort, so lead means the next value in descending sequencing, and lag means the last value which was higher in descending order.

# We use what we just created in the last block.

df_groupby_top_mpg_diff <- df_groupby_top_mpg %>%

group_by(cyl) %>%

mutate(mpg_diff_higher_minus_lower = mpg - lead(mpg)) %>%

mutate(mpg_diff_lower_minus_higher = mpg - lag(mpg))

# display

kable(df_groupby_top_mpg_diff) %>% kable_styling_fc()| car | cyl | mpg | disp | hp | mpg_diff_higher_minus_lower | mpg_diff_lower_minus_higher |

|---|---|---|---|---|---|---|

| Toyota Corolla | 4 | 33.9 | 71.1 | 65 | 1.5 | NA |

| Fiat 128 | 4 | 32.4 | 78.7 | 66 | 2.0 | -1.5 |

| Honda Civic | 4 | 30.4 | 75.7 | 52 | NA | -2.0 |

| Hornet 4 Drive | 6 | 21.4 | 258.0 | 110 | 0.4 | NA |

| Mazda RX4 | 6 | 21.0 | 160.0 | 110 | 0.0 | -0.4 |

| Mazda RX4 Wag | 6 | 21.0 | 160.0 | 110 | NA | 0.0 |

| Pontiac Firebird | 8 | 19.2 | 400.0 | 175 | 0.5 | NA |

| Hornet Sportabout | 8 | 18.7 | 360.0 | 175 | 1.4 | -0.5 |

| Merc 450SL | 8 | 17.3 | 275.8 | 180 | NA | -1.4 |

2.4 Advanced Group Aggregation

2.4.1 Cumulative Statistics within Group

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

2.4.1.1 Cumulative Mean

There is a dataset where there are different types of individuals, perhaps household size, that is the grouping variable. Within each group, we compute the incremental marginal propensity to consume for each additional check. We now also want to know the average propensity to consume up to each check considering all allocated checks. We needed to calculatet this for Nygaard, Sørensen and Wang (2021). This can be dealt with by using the cumall function.

Use the df_hgt_wgt as the testing dataset. In the example below, group by individual id, sort by survey month, and cumulative mean over the protein variable.

In the protein example

First select the testing dataset and variables.

# Load the REconTools Dataset df_hgt_wgt

data("df_hgt_wgt")

# str(df_hgt_wgt)

# Select several rows

df_hgt_wgt_sel <- df_hgt_wgt %>%

filter(S.country == "Cebu") %>%

select(indi.id, svymthRound, prot)Second, arrange, groupby, and cumulative mean. The protein variable is protein for each survey month, from month 2 to higher as babies grow. The protein intake observed is increasing quickly, hence, the cumulative mean is lower than the observed value for the survey month of the baby.

# Group by indi.id and sort by protein

df_hgt_wgt_sel_cummean <- df_hgt_wgt_sel %>%

arrange(indi.id, svymthRound) %>%

group_by(indi.id) %>%

mutate(prot_cummean = cummean(prot))

# display results

REconTools::ff_summ_percentiles(df_hgt_wgt_sel_cummean)

# display results

df_hgt_wgt_sel_cummean %>% filter(indi.id %in% c(17, 18)) %>%

kable() %>% kable_styling_fc()| indi.id | svymthRound | prot | prot_cummean |

|---|---|---|---|

| 17 | 0 | 0.5 | 0.5000000 |

| 17 | 2 | 0.7 | 0.6000000 |

| 17 | 4 | 0.5 | 0.5666667 |

| 17 | 6 | 0.5 | 0.5500000 |

| 17 | 8 | 6.1 | 1.6600000 |

| 17 | 10 | 5.0 | 2.2166667 |

| 17 | 12 | 6.4 | 2.8142857 |

| 17 | 14 | 20.1 | 4.9750000 |

| 17 | 16 | 20.1 | 6.6555556 |

| 17 | 18 | 23.0 | 8.2900000 |

| 17 | 20 | 24.9 | 9.8000000 |

| 17 | 22 | 20.1 | 10.6583333 |

| 17 | 24 | 10.1 | 10.6153846 |

| 17 | 102 | NA | NA |

| 17 | 138 | NA | NA |

| 17 | 187 | NA | NA |

| 17 | 224 | NA | NA |

| 17 | 258 | NA | NA |

| 18 | 0 | 1.2 | 1.2000000 |

| 18 | 2 | 4.7 | 2.9500000 |

| 18 | 4 | 17.2 | 7.7000000 |

| 18 | 6 | 18.6 | 10.4250000 |

| 18 | 8 | NA | NA |

| 18 | 10 | 16.8 | NA |

| 18 | 12 | NA | NA |

| 18 | 14 | NA | NA |

| 18 | 16 | NA | NA |

| 18 | 18 | NA | NA |

| 18 | 20 | NA | NA |

| 18 | 22 | 15.7 | NA |

| 18 | 24 | 22.5 | NA |

| 18 | 102 | NA | NA |

| 18 | 138 | NA | NA |

| 18 | 187 | NA | NA |

| 18 | 224 | NA | NA |

| 18 | 258 | NA | NA |

Third, in the basic implementation above, if an incremental month has NA, no values computed at that point or after. This is the case for individual 18 above. To ignore NA, we have, from this. Note how results for individual 18 changes.

# https://stackoverflow.com/a/49906718/8280804

# Group by indi.id and sort by protein

df_hgt_wgt_sel_cummean_noNA <- df_hgt_wgt_sel %>%

arrange(indi.id, svymthRound) %>%

group_by(indi.id, isna = is.na(prot)) %>%

mutate(prot_cummean = ifelse(isna, NA, cummean(prot)))

# display results

df_hgt_wgt_sel_cummean_noNA %>% filter(indi.id %in% c(17, 18)) %>%

kable() %>% kable_styling_fc()| indi.id | svymthRound | prot | isna | prot_cummean |

|---|---|---|---|---|

| 17 | 0 | 0.5 | FALSE | 0.5000000 |

| 17 | 2 | 0.7 | FALSE | 0.6000000 |

| 17 | 4 | 0.5 | FALSE | 0.5666667 |

| 17 | 6 | 0.5 | FALSE | 0.5500000 |

| 17 | 8 | 6.1 | FALSE | 1.6600000 |

| 17 | 10 | 5.0 | FALSE | 2.2166667 |

| 17 | 12 | 6.4 | FALSE | 2.8142857 |

| 17 | 14 | 20.1 | FALSE | 4.9750000 |

| 17 | 16 | 20.1 | FALSE | 6.6555556 |

| 17 | 18 | 23.0 | FALSE | 8.2900000 |

| 17 | 20 | 24.9 | FALSE | 9.8000000 |

| 17 | 22 | 20.1 | FALSE | 10.6583333 |

| 17 | 24 | 10.1 | FALSE | 10.6153846 |

| 17 | 102 | NA | TRUE | NA |

| 17 | 138 | NA | TRUE | NA |

| 17 | 187 | NA | TRUE | NA |

| 17 | 224 | NA | TRUE | NA |

| 17 | 258 | NA | TRUE | NA |

| 18 | 0 | 1.2 | FALSE | 1.2000000 |

| 18 | 2 | 4.7 | FALSE | 2.9500000 |

| 18 | 4 | 17.2 | FALSE | 7.7000000 |

| 18 | 6 | 18.6 | FALSE | 10.4250000 |

| 18 | 8 | NA | TRUE | NA |

| 18 | 10 | 16.8 | FALSE | 11.7000000 |

| 18 | 12 | NA | TRUE | NA |

| 18 | 14 | NA | TRUE | NA |

| 18 | 16 | NA | TRUE | NA |

| 18 | 18 | NA | TRUE | NA |

| 18 | 20 | NA | TRUE | NA |

| 18 | 22 | 15.7 | FALSE | 12.3666667 |

| 18 | 24 | 22.5 | FALSE | 13.8142857 |

| 18 | 102 | NA | TRUE | NA |

| 18 | 138 | NA | TRUE | NA |

| 18 | 187 | NA | TRUE | NA |

| 18 | 224 | NA | TRUE | NA |

| 18 | 258 | NA | TRUE | NA |

2.4.2 Groups Statistics

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

2.4.2.1 Aggrgate Groups only Unique Group and Count

There are two variables that are numeric, we want to find all the unique groups of these two variables in a dataset and count how many times each unique group occurs

- r unique occurrence of numeric groups

- How to add count of unique values by group to R data.frame

# Numeric value combinations unique Groups

vars.group <- c('hgt0', 'wgt0')

# dataset subsetting

df_use <- df_hgt_wgt %>% select(!!!syms(c(vars.group))) %>%

mutate(hgt0 = round(hgt0/5)*5, wgt0 = round(wgt0/2000)*2000) %>%

drop_na()

# Group, count and generate means for each numeric variables

# mutate_at(vars.group, funs(as.factor(.))) %>%

df.group.count <- df_use %>% group_by(!!!syms(vars.group)) %>%

arrange(!!!syms(vars.group)) %>%

summarise(n_obs_group=n())

# Show results Head 10

df.group.count %>% kable() %>% kable_styling_fc()| hgt0 | wgt0 | n_obs_group |

|---|---|---|

| 40 | 2000 | 122 |

| 45 | 2000 | 4586 |

| 45 | 4000 | 470 |

| 50 | 2000 | 9691 |

| 50 | 4000 | 13106 |

| 55 | 2000 | 126 |

| 55 | 4000 | 1900 |

| 60 | 6000 | 18 |

2.4.2.2 Aggrgate Groups only Unique Group Show up With Means

Several variables that are grouping identifiers. Several variables that are values which mean be unique for each group members. For example, a Panel of income for N households over T years with also household education information that is invariant over time. Want to generate a dataset where the unit of observation are households, rather than household years. Take average of all numeric variables that are household and year specific.

A complicating factor potentially is that the number of observations differ within group, for example, income might be observed for all years for some households but not for other households.

- r dplyr aggregate group average

- Aggregating and analyzing data with dplyr

- column can’t be modified because it is a grouping variable

- see also: Aggregating and analyzing data with dplyr

# In the df_hgt_wgt from R4Econ, there is a country id, village id,

# and individual id, and various other statistics

vars.group <- c('S.country', 'vil.id', 'indi.id')

vars.values <- c('hgt', 'momEdu')

# dataset subsetting

df_use <- df_hgt_wgt %>% select(!!!syms(c(vars.group, vars.values)))

# Group, count and generate means for each numeric variables

df.group <- df_use %>% group_by(!!!syms(vars.group)) %>%

arrange(!!!syms(vars.group)) %>%

summarise_if(is.numeric,

funs(mean = mean(., na.rm = TRUE),

sd = sd(., na.rm = TRUE),

n = sum(is.na(.)==0)))

# Show results Head 10

df.group %>% head(10) %>%

kable() %>%

kable_styling_fc_wide()| S.country | vil.id | indi.id | hgt_mean | momEdu_mean | hgt_sd | momEdu_sd | hgt_n | momEdu_n |

|---|---|---|---|---|---|---|---|---|

| Cebu | 1 | 1 | 61.80000 | 5.3 | 9.520504 | 0 | 7 | 18 |

| Cebu | 1 | 2 | 68.86154 | 7.1 | 9.058931 | 0 | 13 | 18 |

| Cebu | 1 | 3 | 80.45882 | 9.4 | 29.894231 | 0 | 17 | 18 |

| Cebu | 1 | 4 | 88.10000 | 13.9 | 35.533166 | 0 | 18 | 18 |

| Cebu | 1 | 5 | 97.70556 | 11.3 | 41.090366 | 0 | 18 | 18 |

| Cebu | 1 | 6 | 87.49444 | 7.3 | 35.586439 | 0 | 18 | 18 |

| Cebu | 1 | 7 | 90.79412 | 10.4 | 38.722385 | 0 | 17 | 18 |

| Cebu | 1 | 8 | 68.45385 | 13.5 | 10.011961 | 0 | 13 | 18 |

| Cebu | 1 | 9 | 86.21111 | 10.4 | 35.126057 | 0 | 18 | 18 |

| Cebu | 1 | 10 | 87.67222 | 10.5 | 36.508127 | 0 | 18 | 18 |

| S.country | vil.id | indi.id | hgt_mean | momEdu_mean | hgt_sd | momEdu_sd | hgt_n | momEdu_n |

|---|---|---|---|---|---|---|---|---|

| Guatemala | 14 | 2014 | 66.97000 | NaN | 8.967974 | NA | 10 | 0 |

| Guatemala | 14 | 2015 | 71.71818 | NaN | 11.399984 | NA | 11 | 0 |

| Guatemala | 14 | 2016 | 66.33000 | NaN | 9.490352 | NA | 10 | 0 |

| Guatemala | 14 | 2017 | 76.40769 | NaN | 14.827871 | NA | 13 | 0 |

| Guatemala | 14 | 2018 | 74.55385 | NaN | 12.707846 | NA | 13 | 0 |

| Guatemala | 14 | 2019 | 70.47500 | NaN | 11.797390 | NA | 12 | 0 |

| Guatemala | 14 | 2020 | 60.28750 | NaN | 7.060036 | NA | 8 | 0 |

| Guatemala | 14 | 2021 | 84.96000 | NaN | 15.446193 | NA | 10 | 0 |

| Guatemala | 14 | 2022 | 79.38667 | NaN | 15.824749 | NA | 15 | 0 |

| Guatemala | 14 | 2023 | 66.50000 | NaN | 8.613113 | NA | 8 | 0 |

2.4.3 One Variable Group Summary

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

There is a categorical variable (based on one or the interaction of multiple variables), there is a continuous variable, obtain statistics for the continuous variable conditional on the categorical variable, but also unconditionally.

Store results in a matrix, but also flatten results wide to row with appropriate keys/variable-names for all group statistics.

Pick which statistics to be included in final wide row

2.4.3.1 Build Program

# Single Variable Group Statistics (also generate overall statistics)

ff_summ_by_group_summ_one <- function(

df, vars.group, var.numeric, str.stats.group = 'main',

str.stats.specify = NULL, boo.overall.stats = TRUE){

# List of statistics

# https://rdrr.io/cran/dplyr/man/summarise.html

strs.center <- c('mean', 'median')

strs.spread <- c('sd', 'IQR', 'mad')

strs.range <- c('min', 'max')

strs.pos <- c('first', 'last')

strs.count <- c('n_distinct')

# Grouping of Statistics

if (missing(str.stats.specify)) {

if (str.stats.group == 'main') {

strs.all <- c('mean', 'min', 'max', 'sd')

}

if (str.stats.group == 'all') {

strs.all <- c(strs.center, strs.spread, strs.range, strs.pos, strs.count)

}

} else {

strs.all <- str.stats.specify

}

# Start Transform

df <- df %>% drop_na() %>%

mutate(!!(var.numeric) := as.numeric(!!sym(var.numeric)))

# Overall Statistics

if (boo.overall.stats) {

df.overall.stats <- df %>%

summarize_at(vars(var.numeric), funs(!!!strs.all))

if (length(strs.all) == 1) {

# give it a name, otherwise if only one stat, name of stat not saved

df.overall.stats <- df.overall.stats %>%

rename(!!strs.all := !!sym(var.numeric))

}

names(df.overall.stats) <-

paste0(var.numeric, '.', names(df.overall.stats))

}

# Group Sort

df.select <- df %>%

group_by(!!!syms(vars.group)) %>%

arrange(!!!syms(c(vars.group, var.numeric)))

# Table of Statistics

df.table.grp.stats <- df.select %>%

summarize_at(vars(var.numeric), funs(!!!strs.all))

# Add Stat Name

if (length(strs.all) == 1) {

# give it a name, otherwise if only one stat, name of stat not saved

df.table.grp.stats <- df.table.grp.stats %>%

rename(!!strs.all := !!sym(var.numeric))

}

# Row of Statistics

str.vars.group.combine <- paste0(vars.group, collapse='_')

if (length(vars.group) == 1) {

df.row.grp.stats <- df.table.grp.stats %>%

mutate(!!(str.vars.group.combine) :=

paste0(var.numeric, '.',

vars.group, '.g',

(!!!syms(vars.group)))) %>%

gather(variable, value, -one_of(vars.group)) %>%

unite(str.vars.group.combine, c(str.vars.group.combine, 'variable')) %>%

spread(str.vars.group.combine, value)

} else {

df.row.grp.stats <- df.table.grp.stats %>%

mutate(vars.groups.combine :=

paste0(paste0(vars.group, collapse='.')),

!!(str.vars.group.combine) :=

paste0(interaction(!!!(syms(vars.group))))) %>%

mutate(!!(str.vars.group.combine) :=

paste0(var.numeric, '.', vars.groups.combine, '.',

(!!sym(str.vars.group.combine)))) %>%

ungroup() %>%

select(-vars.groups.combine, -one_of(vars.group)) %>%

gather(variable, value, -one_of(str.vars.group.combine)) %>%

unite(str.vars.group.combine, c(str.vars.group.combine, 'variable')) %>%

spread(str.vars.group.combine, value)

}

# Clean up name strings

names(df.table.grp.stats) <-

gsub(x = names(df.table.grp.stats),pattern = "_", replacement = "\\.")

names(df.row.grp.stats) <-

gsub(x = names(df.row.grp.stats),pattern = "_", replacement = "\\.")

# Return

list.return <-

list(df_table_grp_stats = df.table.grp.stats,

df_row_grp_stats = df.row.grp.stats)

# Overall Statistics, without grouping

if (boo.overall.stats) {

df.row.stats.all <- c(df.row.grp.stats, df.overall.stats)

list.return <- append(list.return,

list(df_overall_stats = df.overall.stats,

df_row_stats_all = df.row.stats.all))

}

# Return

return(list.return)

}2.4.3.2 Test

Load data and test

# Library

library(tidyverse)

# Load Sample Data

setwd('C:/Users/fan/R4Econ/_data/')

df <- read_csv('height_weight.csv')2.4.3.2.1 Function Testing By Gender Groups

Need two variables, a group variable that is a factor, and a numeric

Main Statistics:

# Single Variable Group Statistics

ff_summ_by_group_summ_one(

df.select, vars.group = vars.group, var.numeric = var.numeric,

str.stats.group = 'main')$df_table_grp_statsSpecify Two Specific Statistics:

ff_summ_by_group_summ_one(

df.select, vars.group = vars.group, var.numeric = var.numeric,

str.stats.specify = c('mean', 'sd'))$df_table_grp_statsSpecify One Specific Statistics:

2.4.3.2.2 Function Testing By Country and Gender Groups

Need two variables, a group variable that is a factor, and a numeric. Now joint grouping variables.

Main Statistics:

ff_summ_by_group_summ_one(

df.select, vars.group = vars.group, var.numeric = var.numeric,

str.stats.group = 'main')$df_table_grp_statsSpecify Two Specific Statistics:

ff_summ_by_group_summ_one(

df.select, vars.group = vars.group, var.numeric = var.numeric,

str.stats.specify = c('mean', 'sd'))$df_table_grp_statsSpecify One Specific Statistics:

2.4.4 Nested within Group Stats

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

By Multiple within Individual Groups Variables, Averages for All Numeric Variables within All Groups of All Group Variables (Long to very Wide). Suppose you have an individual level final outcome. The individual is observed for N periods, where each period the inputs differ. What inputs impacted the final outcome?

Suppose we can divide N periods in which the individual is in the data into a number of years, a number of semi-years, a number of quarters, or uneven-staggered lengths. We might want to generate averages across individuals and within each of these different possible groups averages of inputs.

Then we want to version of the data where each row is an individual, one of the variables is the final outcome, and the other variables are these different averages: averages for the 1st, 2nd, 3rd year in which indivdiual is in data, averages for 1st, …, final quarter in which indivdiual is in data.

2.4.4.1 Build Function

This function takes as inputs:

- vars.not.groups2avg: a list of variables that are not the within-indivdiual or across-individual grouping variables, but the variables we want to average over. Withnin indivdiual grouping averages will be calculated for these variables using the not-listed variables as within indivdiual groups (excluding vars.indi.grp groups).

- vars.indi.grp: a list or individual variables, and also perhaps villages, province, etc id variables that are higher than individual ID. Note the groups are are ACROSS individual higher level group variables.

- the remaining variables are all within individual grouping variables.

the function output is a dataframe:

- each row is an individual

- initial variables individual ID and across individual groups from vars.indi.grp.

- other variables are all averages for the variables in vars.not.groups2avg

- if there are 2 within individual group variables, and the first has 3 groups (years), the second has 6 groups (semi-years), then there would be 9 average variables.

- each average variables has the original variable name from vars.not.groups2avg plus the name of the within individual grouping variable, and at the end ‘c_x’, where x is a integer representing the category within the group (if 3 years, x=1, 2, 3)

# Data Function

# https://fanwangecon.github.io/R4Econ/summarize/summ/ByGroupsSummWide.html

f.by.groups.summ.wide <- function(df.groups.to.average,

vars.not.groups2avg,

vars.indi.grp = c('S.country','ID'),

display=TRUE) {

# 1. generate categoricals for full year (m.12), half year (m.6), quarter year (m.4)

# 2. generate categoricals also for uneven years (m12t14) using

# stagger (+2 rather than -1)

# 3. reshape wide to long, so that all categorical date groups appear in var=value,

# and categories in var=variable

# 4. calculate mean for all numeric variables for all date groups

# 5. combine date categorical variable and value, single var:

# m.12.c1= first year average from m.12 averaging

######## ######## ######## ######## #######

# Step 1

######## ######## ######## ######## #######

# 1. generate categoricals for full year (m.12), half year (m.6), quarter year (m.4)

# 2. generate categoricals also for uneven years (m12t14) using stagger

# (+2 rather than -1)

######## ######## ######## ######## #######

# S2: reshape wide to long, so that all categorical date groups appear in var=value,

# and categories in var=variable; calculate mean for all

# numeric variables for all date groups

######## ######## ######## ######## #######

df.avg.long <- df.groups.to.average %>%

gather(variable, value, -one_of(c(vars.indi.grp,

vars.not.groups2avg))) %>%

group_by(!!!syms(vars.indi.grp), variable, value) %>%

summarise_if(is.numeric, funs(mean(., na.rm = TRUE)))

if (display){

dim(df.avg.long)

options(repr.matrix.max.rows=10, repr.matrix.max.cols=20)

print(df.avg.long)

}

######## ######## ######## ######## #######

# S3 combine date categorical variable and value, single var:

# m.12.c1= first year average from m.12 averaging; to do this make

# data even longer first

######## ######## ######## ######## #######

# We already have the averages, but we want them to show up as variables,

# mean for each group of each variable.

df.avg.allvars.wide <- df.avg.long %>%

ungroup() %>%

mutate(all_m_cate = paste0(variable, '_c', value)) %>%

select(all_m_cate, everything(), -variable, -value) %>%

gather(variable, value, -one_of(vars.indi.grp), -all_m_cate) %>%

unite('var_mcate', variable, all_m_cate) %>%

spread(var_mcate, value)

if (display){

dim(df.avg.allvars.wide)

options(repr.matrix.max.rows=10, repr.matrix.max.cols=10)

print(df.avg.allvars.wide)

}

return(df.avg.allvars.wide)

}2.4.4.2 Test Program

In our sample dataset, the number of nutrition/height/income etc information observed within each country and month of age group are different. We have a panel dataset for children observed over different months of age.

We have two key grouping variables: 1. country: data are observed for guatemala and cebu 2. month-age (survey month round=svymthRound): different months of age at which each individual child is observed

A child could be observed for many months, or just a few months. A child’s height information could be observed for more months-of-age than nutritional intake information. We eventually want to run regressions where the outcome is height/weight and the input is nutrition. The regressions will be at the month-of-age level. We need to know how many times different variables are observed at the month-of-age level.

# Library

library(tidyverse)

# Load Sample Data

setwd('C:/Users/fan/R4Econ/_data/')

df <- read_csv('height_weight.csv')2.4.4.2.1 Generate Within Individual Groups

In the data, children are observed for different number of months since birth. We want to calculate quarterly, semi-year, annual, etc average nutritional intakes. First generate these within-individual grouping variables. We can also generate uneven-staggered calendar groups as shown below.

mth.var <- 'svymthRound'

df.groups.to.average<- df %>%

filter(!!sym(mth.var) >= 0 & !!sym(mth.var) <= 24) %>%

mutate(m12t24=(floor((!!sym(mth.var) - 12) %/% 14) + 1),

m8t24=(floor((!!sym(mth.var) - 8) %/% 18) + 1),

m12 = pmax((floor((!!sym(mth.var)-1) %/% 12) + 1), 1),

m6 = pmax((floor((!!sym(mth.var)-1) %/% 6) + 1), 1),

m3 = pmax((floor((!!sym(mth.var)-1) %/% 3) + 1), 1))# Show Results

options(repr.matrix.max.rows=30, repr.matrix.max.cols=20)

vars.arrange <- c('S.country','indi.id','svymthRound')

vars.groups.within.indi <- c('m12t24', 'm8t24', 'm12', 'm6', 'm3')

as.tibble(df.groups.to.average %>%

group_by(!!!syms(vars.arrange)) %>%

arrange(!!!syms(vars.arrange)) %>%

select(!!!syms(vars.arrange), !!!syms(vars.groups.within.indi)))2.4.4.2.2 Within Group Averages

With the within-group averages created, we can generate averages for all variables within these groups.

vars.not.groups2avg <- c('prot', 'cal')

vars.indi.grp <- c('S.country', 'indi.id')

vars.groups.within.indi <- c('m12t24', 'm8t24', 'm12', 'm6', 'm3')

df.groups.to.average.select <- df.groups.to.average %>%

select(one_of(c(vars.indi.grp,

vars.not.groups2avg,

vars.groups.within.indi)))

df.avg.allvars.wide <- f.by.groups.summ.wide(df.groups.to.average.select,

vars.not.groups2avg,

vars.indi.grp, display=FALSE)This is the tabular version of results

## [1] 2023 38## [1] "S.country" "indi.id" "cal_m12_c1" "cal_m12_c2"

## [5] "cal_m12t24_c0" "cal_m12t24_c1" "cal_m3_c1" "cal_m3_c2"

## [9] "cal_m3_c3" "cal_m3_c4" "cal_m3_c5" "cal_m3_c6"

## [13] "cal_m3_c7" "cal_m3_c8" "cal_m6_c1" "cal_m6_c2"

## [17] "cal_m6_c3" "cal_m6_c4" "cal_m8t24_c0" "cal_m8t24_c1"

## [21] "prot_m12_c1" "prot_m12_c2" "prot_m12t24_c0" "prot_m12t24_c1"

## [25] "prot_m3_c1" "prot_m3_c2" "prot_m3_c3" "prot_m3_c4"

## [29] "prot_m3_c5" "prot_m3_c6" "prot_m3_c7" "prot_m3_c8"

## [33] "prot_m6_c1" "prot_m6_c2" "prot_m6_c3" "prot_m6_c4"

## [37] "prot_m8t24_c0" "prot_m8t24_c1"| S.country | indi.id | cal_m12_c1 | cal_m12_c2 | cal_m12t24_c0 | cal_m12t24_c1 | cal_m3_c1 | cal_m3_c2 | cal_m3_c3 | cal_m3_c4 | cal_m3_c5 | cal_m3_c6 | cal_m3_c7 | cal_m3_c8 | cal_m6_c1 | cal_m6_c2 | cal_m6_c3 | cal_m6_c4 | cal_m8t24_c0 | cal_m8t24_c1 | prot_m12_c1 | prot_m12_c2 | prot_m12t24_c0 | prot_m12t24_c1 | prot_m3_c1 | prot_m3_c2 | prot_m3_c3 | prot_m3_c4 | prot_m3_c5 | prot_m3_c6 | prot_m3_c7 | prot_m3_c8 | prot_m6_c1 | prot_m6_c2 | prot_m6_c3 | prot_m6_c4 | prot_m8t24_c0 | prot_m8t24_c1 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Cebu | 1 | 132.15714 | NaN | 97.08333 | 342.6000 | 9.10 | 95.50 | 85.3 | 315.30 | NaN | NaN | NaN | NaN | 52.300 | 238.63333 | NaN | NaN | 52.300 | 238.6333 | 5.3571429 | NaN | 4.3666667 | 11.300000 | 0.65 | 3.65 | 2.6 | 13.15 | NaN | NaN | NaN | NaN | 2.150 | 9.6333333 | NaN | NaN | 2.150 | 9.633333 |