Chapter 6 Nonlinear and Other Regressions

6.1 Logit Regression

6.1.1 Binary Logit

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

Data Preparation

df_mtcars <- mtcars

# X-variables to use on RHS

ls_st_xs <- c('mpg', 'qsec')

ls_st_xs <- c('mpg')

ls_st_xs <- c('qsec')

ls_st_xs <- c('wt')

ls_st_xs <- c('mpg', 'wt', 'vs')

svr_binary <- 'hpLowHigh'

svr_binary_lb0 <- 'LowHP'

svr_binary_lb1 <- 'HighHP'

svr_outcome <- 'am'

sdt_name <- 'mtcars'

# Discretize hp

df_mtcars <- df_mtcars %>%

mutate(!!sym(svr_binary) := cut(hp,

breaks=c(-Inf, 210, Inf),

labels=c(svr_binary_lb0, svr_binary_lb1)))6.1.1.1 Logit Regresion and Prediction

logit regression with glm, and predict using estimation data. Prediction and estimation with one variable.

# Regress

rs_logit <- glm(as.formula(paste(svr_outcome, "~", paste(ls_st_xs, collapse="+")))

,data = df_mtcars, family = "binomial")

summary(rs_logit)##

## Call:

## glm(formula = as.formula(paste(svr_outcome, "~", paste(ls_st_xs,

## collapse = "+"))), family = "binomial", data = df_mtcars)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 22.53287 13.93556 1.617 0.1059

## mpg -0.01919 0.33693 -0.057 0.9546

## wt -6.68827 3.02783 -2.209 0.0272 *

## vs -4.38343 2.86743 -1.529 0.1263

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 41.381 on 30 degrees of freedom

## Residual deviance: 13.073 on 27 degrees of freedom

## (1 observation deleted due to missingness)

## AIC: 21.073

##

## Number of Fisher Scoring iterations: 7# Predcit Using Regression Data

df_mtcars$p_mpg <- predict(rs_logit, newdata = df_mtcars, type = "response")6.1.1.1.1 Prediction with Observed Binary Input

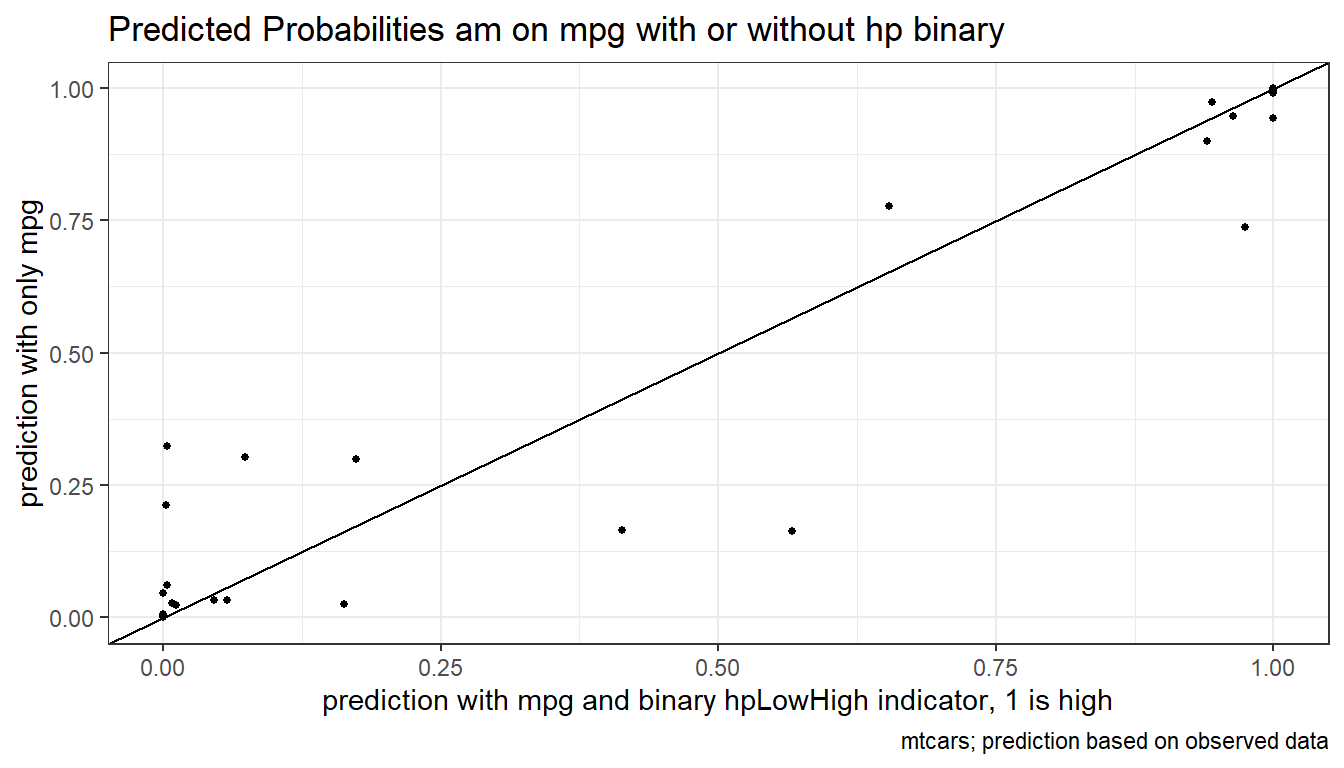

Logit regression with a continuous variable and a binary variable. Predict outcome with observed continuous variable as well as observed binary input variable.

# Regress

rs_logit_bi <- glm(as.formula(paste(svr_outcome,

"~ factor(", svr_binary,") + ",

paste(ls_st_xs, collapse="+")))

, data = df_mtcars, family = "binomial")

summary(rs_logit_bi)##

## Call:

## glm(formula = as.formula(paste(svr_outcome, "~ factor(", svr_binary,

## ") + ", paste(ls_st_xs, collapse = "+"))), family = "binomial",

## data = df_mtcars)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 3.8614 18.0138 0.214 0.8303

## factor(hpLowHigh)HighHP 6.9559 5.5134 1.262 0.2071

## mpg 0.8874 0.8941 0.993 0.3209

## wt -6.6834 3.3355 -2.004 0.0451 *

## vs -5.8324 4.2498 -1.372 0.1699

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 41.3808 on 30 degrees of freedom

## Residual deviance: 8.9646 on 26 degrees of freedom

## (1 observation deleted due to missingness)

## AIC: 18.965

##

## Number of Fisher Scoring iterations: 9# Predcit Using Regresion Data

df_mtcars$p_mpg_hp <- predict(rs_logit_bi, newdata = df_mtcars, type = "response")

# Predicted Probabilities am on mgp with or without hp binary

scatter <- ggplot(df_mtcars, aes(x=p_mpg_hp, y=p_mpg)) +

geom_point(size=1) +

# geom_smooth(method=lm) + # Trend line

geom_abline(intercept = 0, slope = 1) + # 45 degree line

labs(title = paste0('Predicted Probabilities ', svr_outcome, ' on ', ls_st_xs, ' with or without hp binary'),

x = paste0('prediction with ', ls_st_xs, ' and binary ', svr_binary, ' indicator, 1 is high'),

y = paste0('prediction with only ', ls_st_xs),

caption = 'mtcars; prediction based on observed data') +

theme_bw()

print(scatter)

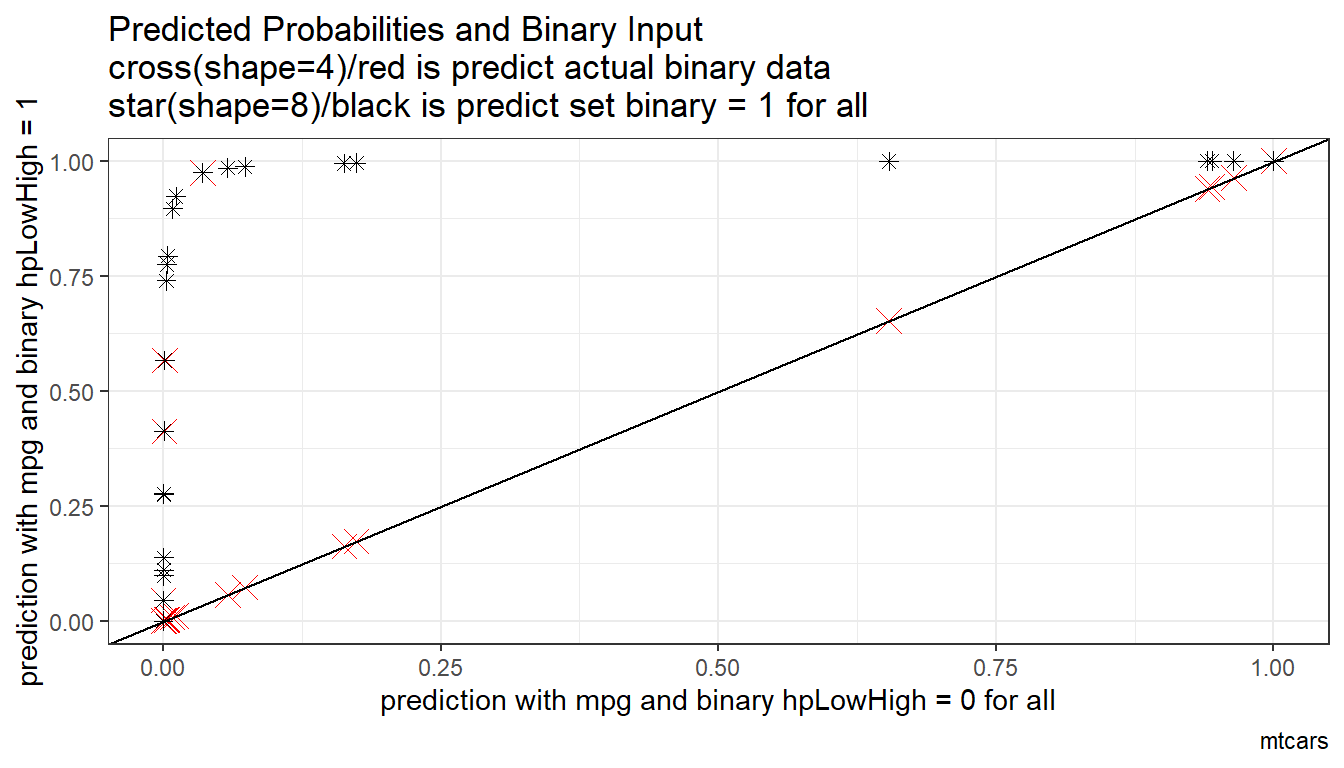

6.1.1.1.2 Prediction with Binary set to 0 and 1

Now generate two predictions. One set where binary input is equal to 0, and another where the binary inputs are equal to 1. Ignore whether in data binary input is equal to 0 or 1. Use the same regression results as what was just derived.

Note that given the example here, the probability changes a lot when we

##

## Call:

## glm(formula = as.formula(paste(svr_outcome, "~ factor(", svr_binary,

## ") + ", paste(ls_st_xs, collapse = "+"))), family = "binomial",

## data = df_mtcars)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 3.8614 18.0138 0.214 0.8303

## factor(hpLowHigh)HighHP 6.9559 5.5134 1.262 0.2071

## mpg 0.8874 0.8941 0.993 0.3209

## wt -6.6834 3.3355 -2.004 0.0451 *

## vs -5.8324 4.2498 -1.372 0.1699

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 41.3808 on 30 degrees of freedom

## Residual deviance: 8.9646 on 26 degrees of freedom

## (1 observation deleted due to missingness)

## AIC: 18.965

##

## Number of Fisher Scoring iterations: 9# Two different dataframes, mutate the binary regressor

df_mtcars_bi0 <- df_mtcars %>% mutate(!!sym(svr_binary) := svr_binary_lb0)

df_mtcars_bi1 <- df_mtcars %>% mutate(!!sym(svr_binary) := svr_binary_lb1)

# Predcit Using Regresion Data

df_mtcars$p_mpg_hp_bi0 <- predict(rs_logit_bi, newdata = df_mtcars_bi0, type = "response")

df_mtcars$p_mpg_hp_bi1 <- predict(rs_logit_bi, newdata = df_mtcars_bi1, type = "response")

# Predicted Probabilities and Binary Input

scatter <- ggplot(df_mtcars, aes(x=p_mpg_hp_bi0)) +

geom_point(aes(y=p_mpg_hp), size=4, shape=4, color="red") +

geom_point(aes(y=p_mpg_hp_bi1), size=2, shape=8) +

# geom_smooth(method=lm) + # Trend line

geom_abline(intercept = 0, slope = 1) + # 45 degree line

labs(title = paste0('Predicted Probabilities and Binary Input',

'\ncross(shape=4)/red is predict actual binary data',

'\nstar(shape=8)/black is predict set binary = 1 for all'),

x = paste0('prediction with ', ls_st_xs, ' and binary ', svr_binary, ' = 0 for all'),

y = paste0('prediction with ', ls_st_xs, ' and binary ', svr_binary, ' = 1'),

caption = paste0(sdt_name)) +

theme_bw()

print(scatter)

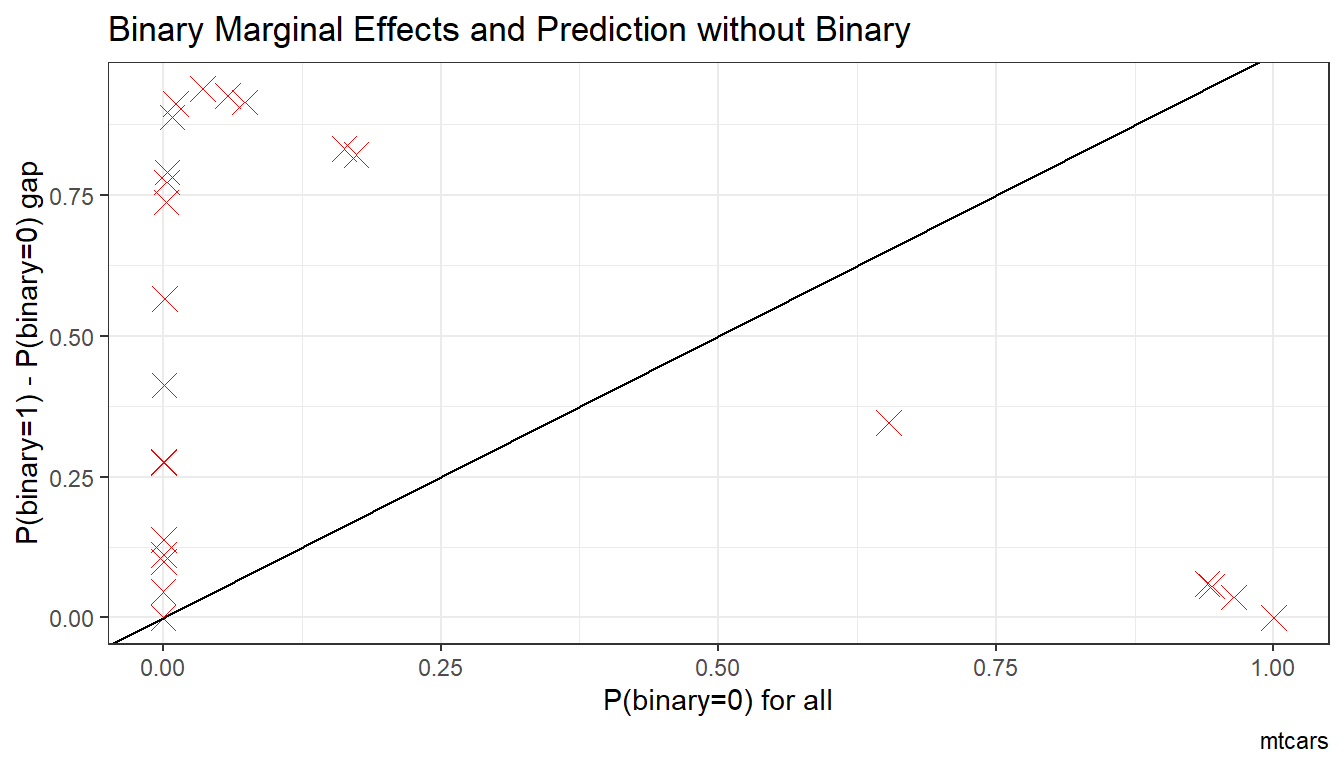

6.1.1.1.3 Prediction with Binary set to 0 and 1 Difference

What is the difference in probability between binary = 0 vs binary = 1. How does that relate to the probability of outcome of interest when binary = 0 for all.

In the binary logit case, the relationship will be hump–shaped by construction between \(A_i\) and \(\alpha_i\). In the exponential wage cases, the relationship is convex upwards.

# Generate Gap Variable

df_mtcars <- df_mtcars %>% mutate(alpha_i = p_mpg_hp_bi1 - p_mpg_hp_bi0) %>%

mutate(A_i = p_mpg_hp_bi0)

# Binary Marginal Effects and Prediction without Binary

scatter <- ggplot(df_mtcars, aes(x=A_i)) +

geom_point(aes(y=alpha_i), size=4, shape=4, color="red") +

geom_abline(intercept = 0, slope = 1) + # 45 degree line

labs(title = paste0('Binary Marginal Effects and Prediction without Binary'),

x = 'P(binary=0) for all',

y = 'P(binary=1) - P(binary=0) gap',

caption = paste0(sdt_name)) +

theme_bw()

print(scatter)

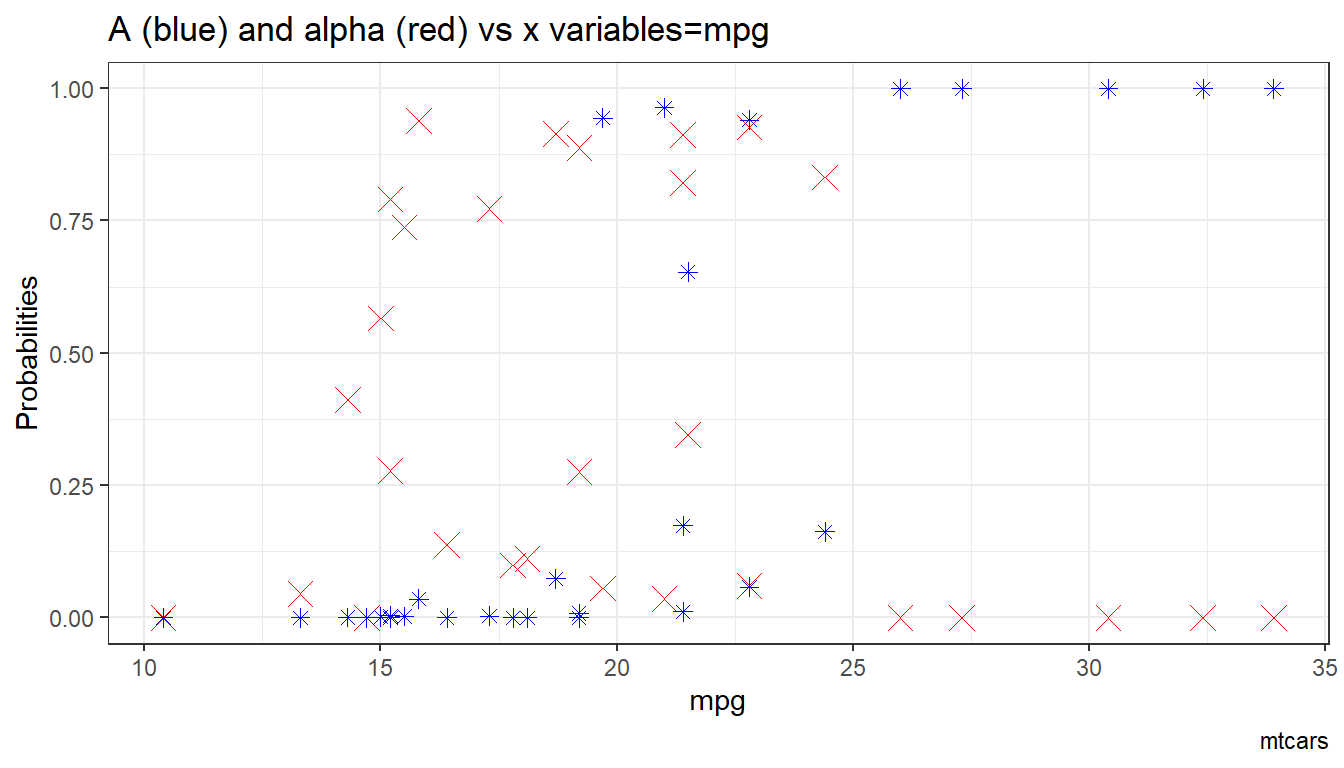

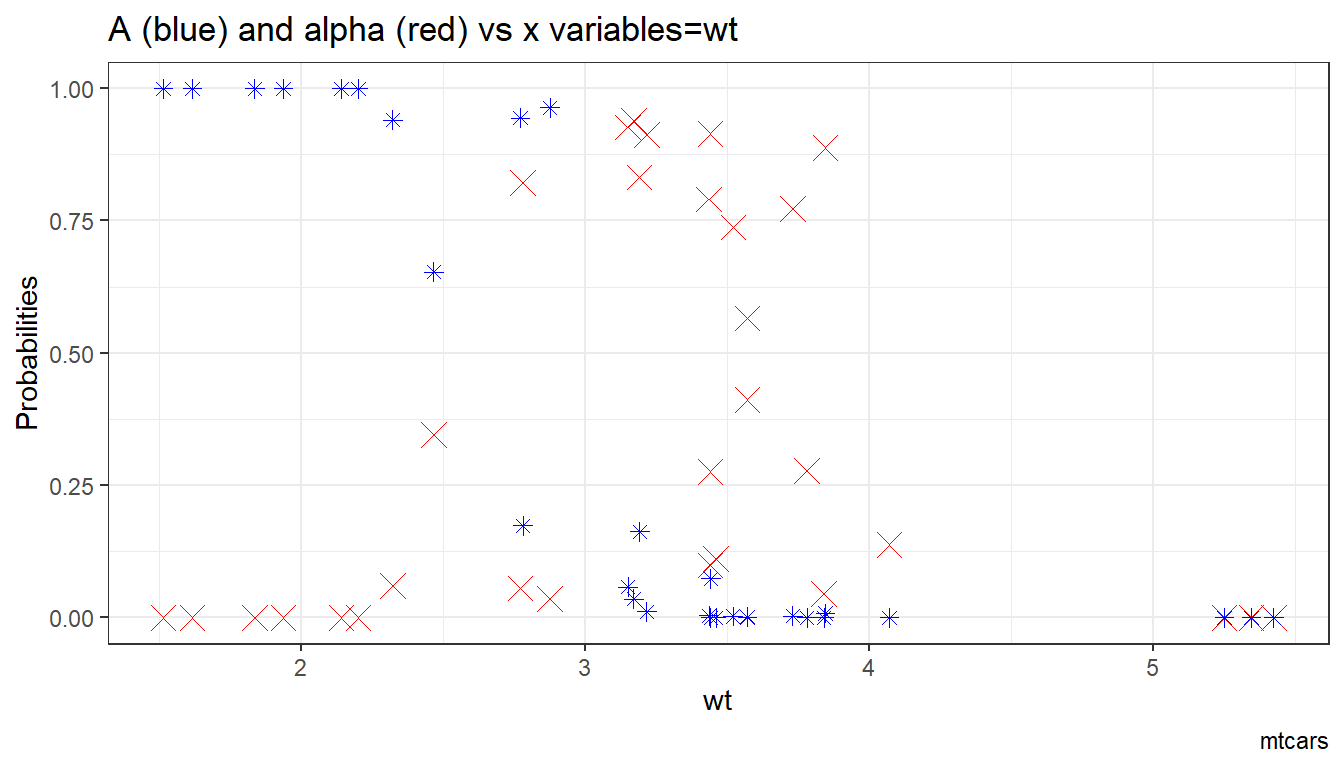

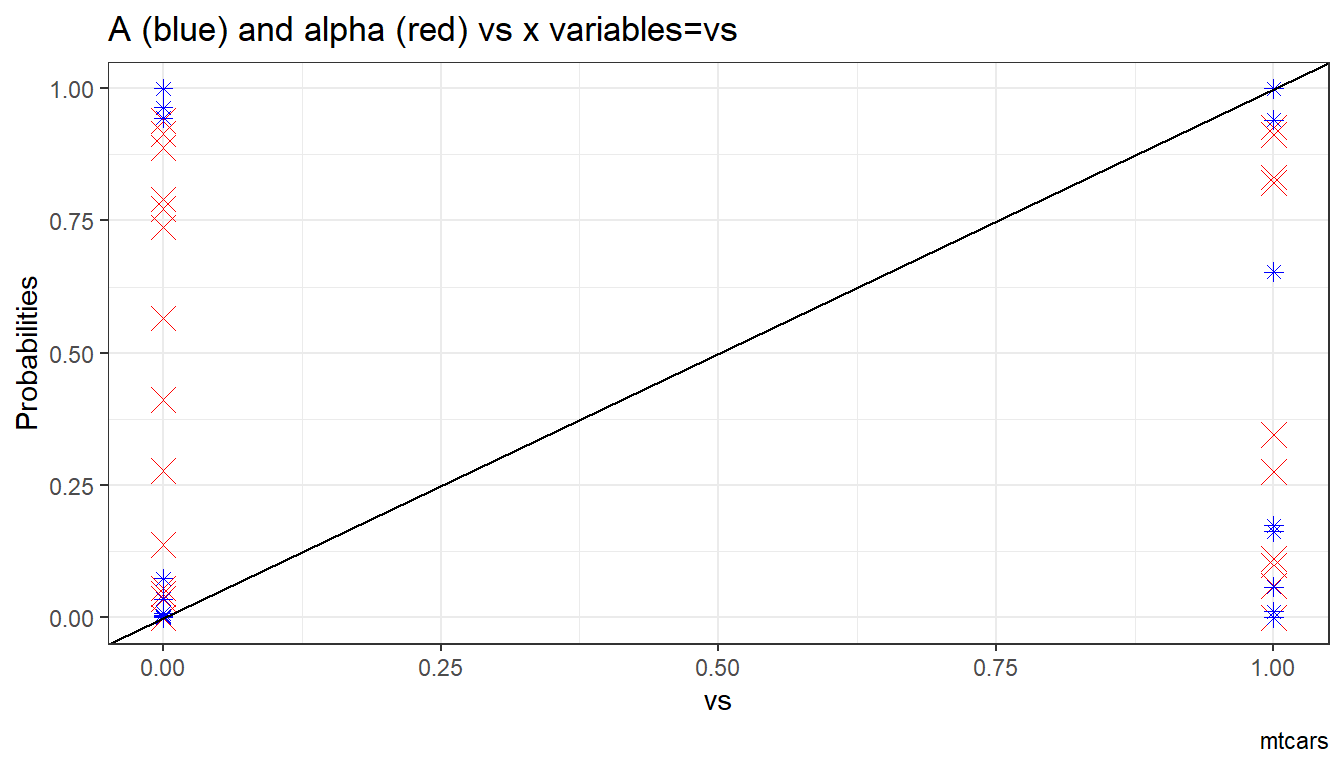

6.1.1.1.4 X variables and A and alpha

Given the x-variables included in the logit regression, how do they relate to A_i and alpha_i

# Generate Gap Variable

df_mtcars <- df_mtcars %>% mutate(alpha_i = p_mpg_hp_bi1 - p_mpg_hp_bi0) %>%

mutate(A_i = p_mpg_hp_bi0)

# Binary Marginal Effects and Prediction without Binary

ggplot.A.alpha.x <- function(svr_x, df,

svr_alpha = 'alpha_i', svr_A = "A_i"){

scatter <- ggplot(df, aes(x=!!sym(svr_x))) +

geom_point(aes(y=alpha_i), size=4, shape=4, color="red") +

geom_point(aes(y=A_i), size=2, shape=8, color="blue") +

geom_abline(intercept = 0, slope = 1) + # 45 degree line

labs(title = paste0('A (blue) and alpha (red) vs x variables=', svr_x),

x = svr_x,

y = 'Probabilities',

caption = paste0(sdt_name)) +

theme_bw()

return(scatter)

}

# Plot over multiple

lapply(ls_st_xs,

ggplot.A.alpha.x,

df = df_mtcars)## [[1]]

##

## [[2]]

##

## [[3]]

6.2 Quantile Regression

6.2.1 Quantile Regression Basics

Go back to fan’s REconTools research support package, R4Econ examples page, PkgTestR packaging guide, or Stat4Econ course page.

6.2.1.1 Estimate Mean and Conditional Quantile Coefficients using mtcars dataset

Here, we conduct tests for using the quantreg package, using the built-in mtcars dataset.

First, estimate the mean (OLS) regression:

##

## Call:

## lm(formula = mpg ~ disp + hp + factor(am) + factor(vs), data = mtcars)

##

## Residuals:

## Min 1Q Median 3Q Max

## -4.8493 -1.9540 0.4649 1.4002 5.4239

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 25.664864 2.976827 8.622 4.23e-09 ***

## disp -0.008676 0.010053 -0.863 0.39602

## hp -0.040770 0.014085 -2.895 0.00759 **

## factor(am)1 4.624025 1.498995 3.085 0.00479 **

## factor(vs)1 1.454075 1.709450 0.851 0.40275

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 2.801 on 26 degrees of freedom

## (1 observation deleted due to missingness)

## Multiple R-squared: 0.8187, Adjusted R-squared: 0.7908

## F-statistic: 29.35 on 4 and 26 DF, p-value: 2.664e-09Now estimate conditional quantile regressions (not that this remains linear) at various quantiles, standard error obtained via bootstrap. Note that there is a gradient in the quantile hp coefficients as well as disp. disp sign reverses, also the coefficient on factor am is different by quantiles:

ls_fl_quantiles <- c(0.25, 0.50, 0.75)

fit_quantiles <- rq(mpg ~ disp + hp + factor(am),

tau = ls_fl_quantiles,

data = mtcars)

summary(fit_quantiles, se = "boot")##

## Call: rq(formula = mpg ~ disp + hp + factor(am), tau = ls_fl_quantiles,

## data = mtcars)

##

## tau: [1] 0.25

##

## Coefficients:

## Value Std. Error t value Pr(>|t|)

## (Intercept) 25.34665 1.85938 13.63181 0.00000

## disp -0.02441 0.00858 -2.84514 0.00837

## hp -0.01672 0.01655 -1.00987 0.32152

## factor(am)1 1.64389 1.51309 1.08644 0.28689

##

## Call: rq(formula = mpg ~ disp + hp + factor(am), tau = ls_fl_quantiles,

## data = mtcars)

##

## tau: [1] 0.5

##

## Coefficients:

## Value Std. Error t value Pr(>|t|)

## (Intercept) 27.49722 1.91907 14.32839 0.00000

## disp -0.02253 0.01640 -1.37347 0.18090

## hp -0.02713 0.02469 -1.09910 0.28143

## factor(am)1 3.37328 1.92135 1.75568 0.09048

##

## Call: rq(formula = mpg ~ disp + hp + factor(am), tau = ls_fl_quantiles,

## data = mtcars)

##

## tau: [1] 0.75

##

## Coefficients:

## Value Std. Error t value Pr(>|t|)

## (Intercept) 28.52841 1.46520 19.47068 0.00000

## disp 0.00420 0.01299 0.32307 0.74913

## hp -0.06815 0.01612 -4.22901 0.00024

## factor(am)1 8.03935 2.46073 3.26706 0.002966.2.1.2 Test Conditional Quantile Coefficients if Different

Use the rq.anova function frm the quantile regression packge to conduct WALD test. Remember WALD test says given unrestricted model’s estimates, test where null is that the coefficients satisfy some linear restrictions.

To test, use the returned object from running rq with different numbers of quantiles, and set the option joint to true or false. When joint is true: “equality of slopes should be done as joint tests on all slope parameters”, when joint is false: “separate tests on each of the slope parameters should be reported”. A slope parameter refers to one of the RHS variables.

Note that quantile tests are “parallel line” tests. Meaning that we should except to have different x-intercepts for each quantile, because they represents the levels of the conditional shocks distributions. However, if quantile coefficients for the slopes are all the same, then there are no quantile specific effects, mean effects would be sufficient.

see:

6.2.1.2.1 Test Statistical Difference between 25th and 50th Conditional Quantiles

Given the quantile estimates above, the difference between 0.25 and 0.50 quantiles exists, but are they sufficiently large to be statistically different? What is the p-value? Reviewing the results below, they are not statistically different.

First, joint = TRUE. This is not testing if the coefficien on disp is the same as the coefficient on hp. This is testing jointly if the coefficients for different quantiles of disp, and different quantiles of hp are the same for each RHS variable.

ls_fl_quantiles <- c(0.25, 0.50)

fit_quantiles <- rq(mpg ~ disp + hp + factor(am),

tau = ls_fl_quantiles,

data = mtcars)

anova(fit_quantiles, test = "Wald", joint=TRUE)## Quantile Regression Analysis of Deviance Table

##

## Model: mpg ~ disp + hp + factor(am)

## Joint Test of Equality of Slopes: tau in { 0.25 0.5 }

##

## Df Resid Df F value Pr(>F)

## 1 3 59 0.705 0.5529Second, joint = False:

## Quantile Regression Analysis of Deviance Table

##

## Model: mpg ~ disp + hp + factor(am)

## Tests of Equality of Distinct Slopes: tau in { 0.25 0.5 }

##

## Df Resid Df F value Pr(>F)

## disp 1 61 0.0307 0.8615

## hp 1 61 0.5418 0.4645

## factor(am)1 1 61 0.9959 0.32226.2.1.2.2 Test Statistical Difference between 25th, 50th, and 75th Conditional Quantiles

The 1st quartile and median do not seem to be statistically different, now include the 3rd quartile. As seen earlier, the quartiles jointly show a gradient. Now, we can see that idisp, hp and am are separately have statistically different

First, joint = TRUE:

ls_fl_quantiles <- c(0.25, 0.50, 0.75)

fit_quantiles <- rq(mpg ~ disp + hp + factor(am),

tau = ls_fl_quantiles,

data = mtcars)

anova(fit_quantiles, test = "Wald", joint=TRUE)## Quantile Regression Analysis of Deviance Table

##

## Model: mpg ~ disp + hp + factor(am)

## Joint Test of Equality of Slopes: tau in { 0.25 0.5 0.75 }

##

## Df Resid Df F value Pr(>F)

## 1 6 87 3.292 0.005752 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Second, joint = False:

## Quantile Regression Analysis of Deviance Table

##

## Model: mpg ~ disp + hp + factor(am)

## Tests of Equality of Distinct Slopes: tau in { 0.25 0.5 0.75 }

##

## Df Resid Df F value Pr(>F)

## disp 2 91 5.4482 0.005823 **

## hp 2 91 7.2035 0.001247 **

## factor(am)1 2 91 6.8592 0.001680 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1